Coding projects for software developers: Let’s get some hands-on practice – Part 1

Hello! Below is a curated list of software projects that we can code over a weekend or over a few days on the side. Continual hands-on practice with established and new technologies, along with learning new concepts, will help us become better developers.

We are often on the lookout for good hands-on projects or real-world coding exercises that we can do on the side to sharpen our skills, learn new tech and concepts, and revise what we already know. A hands-on approach to learning not only boosts our confidence but also accelerates our career growth.

However, resources that help us find good side projects are scarce. If you search for software projects, you’ll mostly find outdated suggestions like coding a library management system, a blogging system, a calculator app, a task management system, a restaurant management system and so on. You get the idea.

Honestly, reading these project ideas SUCKS THE SOUL OUT OF ME. They are dangerously dull and suitable for learning basic OOP, not much more than that. Hence this handpicked, well-researched, industry-relevant list, with projects for all skill levels (beginner, intermediate and advanced). Additionally, I’ve kept these projects and their tasks micro to moderately sized so they don’t overwhelm us.

This is an ongoing list where projects will be continually added. If you find it helpful, I urge you to do two things:

1. Bookmark this page and share it with your network so that more of us can benefit from it. If you share it on your social handles, do tag me on LinkedIn or X (wherever you are) so that I get notified.

I’ve created a Discord server where we can discuss our progress on weekend projects, stay accountable, and talk about industry trends and career growth. Join it as well; kickstart your next project and share your progress with us.

Sharing this list with your network, and sharing your progress with us, would mean a lot and motivate me to keep extending the list.

2. If you have ideas for new fun projects to add to the list, do connect with me on the social networks mentioned above or email me at contact@scaleyourapp.com

With this being said, let’s get on with it.

Project 1: Analyzing railway traffic in the Netherlands with DuckDB

Tags: Database, Data Analytics, SQL

Level: Beginner, Intermediate

DuckDB is an open-source, in-process relational (table-oriented) OLAP (Online Analytical Processing) database. It can run fully in memory or persist data to a file, and it focuses on low-latency data processing and analytics use cases.

The DuckDB team analyzed a real-world, open Dutch railway dataset with DuckDB to showcase some of the product’s features.

The core functionality of the project includes running queries to find the busiest station per month (fun fact: it’s not Amsterdam :)), the top three busiest stations for each summer month, the largest distance between train stations in the Netherlands, querying remote data files, etc.
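The DuckDB post answers these questions in SQL. To make the "busiest station per month" query concrete, here is a minimal Java sketch of the same logic (group by month and station, count, keep the top station per month) over in-memory rows. The `ServiceStop` record and its two fields are hypothetical stand-ins for the dataset's columns, not its real schema.

```java
import java.time.LocalDate;
import java.time.YearMonth;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical row: one train service departing from a station on a given date.
// The real dataset has different columns; this only mirrors the shape of the query.
record ServiceStop(String stationCode, LocalDate date) {}

class BusiestStations {
    // Same logic as: GROUP BY month, station -> COUNT(*), then keep the top station per month.
    static Map<YearMonth, String> busiestStationPerMonth(List<ServiceStop> stops) {
        // month -> (station -> number of services), months kept in calendar order
        Map<YearMonth, Map<String, Long>> counts = stops.stream()
                .collect(Collectors.groupingBy(
                        s -> YearMonth.from(s.date()),
                        TreeMap::new,
                        Collectors.groupingBy(ServiceStop::stationCode, Collectors.counting())));

        Map<YearMonth, String> busiest = new TreeMap<>();
        counts.forEach((month, perStation) -> busiest.put(month,
                perStation.entrySet().stream()
                        .max(Map.Entry.comparingByValue()) // highest count wins
                        .orElseThrow()
                        .getKey()));
        return busiest;
    }
}
```

In DuckDB, a single `GROUP BY` query plus a per-month maximum does the same work; the sketch only shows what that query computes.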

This is a good weekend project for those who want to get their hands dirty running SQL queries with an in-memory analytical database. Moreover, the linked resource walks you through it, so you won’t feel lost or left in the dark.

Key learnings from this hands-on exercise

You’ll learn:

To run analytical SQL queries on a real-world dataset.

To run SQL queries on remote datasets over HTTP and the S3 API.

About in-memory databases, processes, threads and related concepts. I’ve discussed in-memory databases on my blog here. Do give it a read.

DuckDB does not run as a separate process; it is embedded entirely in the host process. It operates within the same process and memory space as the application that uses it, rather than running as an independent database server process. As a result, there is no inter-process communication, which reduces complexity and improves performance.

Since the database focuses on OLAP use cases, it uses a columnar, vectorized query execution engine: large batches of values, aka vectors, are processed in a single operation rather than one value at a time. This significantly reduces per-value processing overhead, requiring fewer CPU cycles.

You’ll find a discussion on OLAP wide-column databases on my blog here.

Traditional OLTP (Online Transaction Processing) databases like MySQL, SQLite or PostgreSQL typically process data one row at a time, which costs more CPU work per value in large analytical scans.

OLAP use cases involve complex queries over large data volumes, so execution efficiency is critical to keeping latency low.

For instance, to compute the sum of an integer column, a vectorized engine processes a whole chunk (vector) of integers per operation instead of handling each integer one by one.

This reduces the number of function calls and the per-value interpretation overhead, which are relatively expensive. Modern CPUs are optimized for operating on batches of data: vectorized execution makes good use of CPU caches, SIMD (Single Instruction, Multiple Data) instructions and CPU parallelism to speed up execution.
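The difference between the two execution styles can be sketched in plain Java. This is an illustration under assumptions, not how DuckDB is implemented: the `Row` record, the helper names and the 2048-value vector size are chosen for the example. The row-by-row version makes one call per value; the columnar version keeps the column in a contiguous `int[]` and hands the summing loop a whole vector at a time.

```java
import java.util.List;

// Row-oriented layout: each row is an object, so one column's values are scattered in memory.
record Row(int id, int amount) {}

class VectorizedSum {
    // Hypothetical vector size; real engines pick a size that fits comfortably in CPU cache.
    static final int VECTOR_SIZE = 2048;

    // Tuple-at-a-time: one extraction call per row.
    static long sumRowByRow(List<Row> rows) {
        long total = 0;
        for (Row row : rows) {
            total += extractAmount(row); // per-row call overhead
        }
        return total;
    }

    private static int extractAmount(Row row) {
        return row.amount();
    }

    // Vectorized: the column is a contiguous int[]; each call processes a whole vector.
    static long sumColumnar(int[] amountColumn) {
        long total = 0;
        for (int start = 0; start < amountColumn.length; start += VECTOR_SIZE) {
            int end = Math.min(start + VECTOR_SIZE, amountColumn.length);
            total += sumVector(amountColumn, start, end); // one call per vector, not per value
        }
        return total;
    }

    // Tight loop over contiguous primitives: cache-friendly and a candidate for
    // auto-vectorization (SIMD) by the JIT compiler.
    private static long sumVector(int[] values, int start, int end) {
        long partial = 0;
        for (int i = start; i < end; i++) {
            partial += values[i];
        }
        return partial;
    }
}
```

Both methods return the same total; the columnar path simply spends far less overhead per value, and its inner loop is the kind of code a JIT compiler may turn into SIMD instructions.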

Modern databases like CockroachDB and ClickHouse also use vectorized execution for performance.

If you delve deeper into the database docs and the articles I’ve linked above, you’ll also learn these backend engineering, distributed systems and system design concepts, which I believe will broaden your knowledge considerably.

Furthermore, since the dataset is open, you can run the same coding exercise with any OLAP database of your choice.

Project 2: A web service managing real-time train running information

Tags: Backend, Web Service, REST API, Testing, Observability, Deployment, Go, Prometheus, Docker

Level: Intermediate, Advanced

Rijden de Treinen runs GoTrain, a backend service written in Go that receives, processes and distributes real-time data about train services in the Netherlands.

The service runs in production and is accessible via the website and the mobile app. It ingests data streams containing the statuses of running trains, processes them, keeps the data in memory and provides it to third parties via a REST API.

Through the REST API, clients can request a summary of all departing trains for a single station, details of a specific departing train, upcoming arrivals and so on.
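To get a feel for the REST side, here is a minimal Java sketch of an in-memory departure store exposed over HTTP with the JDK's built-in `com.sun.net.httpserver.HttpServer`. It is not GoTrain's code or API: the `/departures/{station}` path, the `Departure` record and its fields are hypothetical, and the JSON is hand-built without escaping to keep the example short.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.charset.StandardCharsets;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

// Hypothetical departure record; a real service tracks far more fields.
record Departure(String serviceId, String destination, String time) {}

class DepartureApi {
    // In-memory store: station code -> departures, safe for concurrent readers and writers.
    private final Map<String, List<Departure>> byStation = new ConcurrentHashMap<>();

    void addDeparture(String station, Departure departure) {
        byStation.computeIfAbsent(station, k -> new CopyOnWriteArrayList<>()).add(departure);
    }

    List<Departure> departuresFor(String station) {
        return byStation.getOrDefault(station, List.of());
    }

    // Serves GET /departures/{station} as a JSON array (no escaping: values assumed safe).
    HttpServer start(int port) {
        try {
            HttpServer server = HttpServer.create(new InetSocketAddress(port), 0);
            server.createContext("/departures/", exchange -> {
                String station = exchange.getRequestURI().getPath().substring("/departures/".length());
                StringBuilder json = new StringBuilder("[");
                for (Departure d : departuresFor(station)) {
                    if (json.length() > 1) json.append(',');
                    json.append(String.format("{\"service\":\"%s\",\"destination\":\"%s\",\"time\":\"%s\"}",
                            d.serviceId(), d.destination(), d.time()));
                }
                byte[] body = json.append(']').toString().getBytes(StandardCharsets.UTF_8);
                exchange.getResponseHeaders().set("Content-Type", "application/json");
                exchange.sendResponseHeaders(200, body.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            });
            server.start();
            return server;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Reads never touch the upstream data feed; they are served straight from memory, which is what keeps the endpoint fast.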

You’ll find the details of this backend service on its GitHub repo. The project further integrates Prometheus for observability.

I’ve delved deep into observability here. Also, do go through this post to understand how Prometheus fits into the picture.

Key learnings from this project

The service ingests, processes and stores real-time data streams. By working on this project, you’ll understand the service’s architecture and how data is ingested in real time and processed in memory for efficiency.
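The ingestion side can be sketched the same way. In this hypothetical Java example, a feed receiver hands `TrainStatus` messages to a `BlockingQueue`, and a single worker thread applies them to an in-memory map, keeping only the newest message per service so that late or duplicate updates cannot overwrite fresher data. The message shape and the poison-pill shutdown are assumptions for illustration.

```java
import java.util.Map;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical status message from a real-time feed.
record TrainStatus(String serviceId, long timestampMillis, int delayMinutes) {}

class StatusIngestor implements Runnable {
    // Poison pill that tells the worker to stop (a convention of this sketch).
    static final TrainStatus STOP = new TrainStatus("__stop__", Long.MIN_VALUE, 0);

    private final BlockingQueue<TrainStatus> inbox = new LinkedBlockingQueue<>();
    private final Map<String, TrainStatus> latest = new ConcurrentHashMap<>();

    // Called by the feed receiver; returns immediately (asynchronous hand-off).
    void submit(TrainStatus status) {
        inbox.add(status);
    }

    TrainStatus current(String serviceId) {
        return latest.get(serviceId);
    }

    @Override
    public void run() {
        try {
            while (true) {
                TrainStatus msg = inbox.take(); // blocks until a message arrives
                if (msg == STOP) return;
                // Keep only the newest message per service; older ones arriving late are ignored.
                latest.merge(msg.serviceId(), msg,
                        (old, incoming) -> incoming.timestampMillis() >= old.timestampMillis() ? incoming : old);
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

The queue decouples receiving from processing, so a burst of feed messages never blocks the receiver while the worker catches up.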

To go through the fundamentals of web architecture and application architecture, check out the linked posts that I’ve written on my blog.

Additionally, this in-memory processing article will help you understand how in-memory processing reduces latency. Since this app deals with real-time information, low latency is crucial.

You’ll also get insights into REST API implementation, structuring API endpoints, serving dynamic information to clients and integration with external data sources.

Furthermore, the repo also talks about data archiving for future analysis, queuing for asynchronous processing, containerization and setting up observability. I’ve linked the observability articles above for you to understand the fundamentals.

Containerization will help you understand deployment, scalability and cloud-native architecture.

If you are a beginner, you can skip these additional components and focus on coding the data ingestion, in-memory processing and the REST API part. Once you are done with these, the rest of the things can be implemented at a later point.

At the time of writing, the repo lists increased test coverage as a goal, so you can work on test cases as well. Additionally, you can build custom client dashboards by extending the REST API.

Though the project is written in Go, nothing stops us from implementing the service in the programming language and tech stack of our choice. It will be one hell of a learning experience implementing things from the bare bones, and you’ll gain excellent hands-on experience building a data-driven, scalable application.

We can discuss things further in the Discord group.

Project 3: Build a family cash card application

Tags: Backend, Web Service, REST API, Testing, Java, Spring Boot, Spring Data

Level: Beginner

Spring Academy offers a hands-on project in which you build a simple family cash card application; its interactive exercises teach you to build a REST API from the bare bones.

The app allows parents to manage allowances in the form of digital debit cards for their kids. It gives them ease and control over managing funds for their children.

Key learnings from this project

By coding this project, you’ll learn:

To implement a REST API

Fundamentals of API design and implementing API endpoints

To make the app secure by implementing authentication and authorization

Test-driven development

Persisting application data leveraging different application layers like the Controller and Repository

Software development principles like separation of concerns, loose coupling, etc.

Spring Boot fundamentals. You’ll be able to leverage the framework to implement other real-world projects.

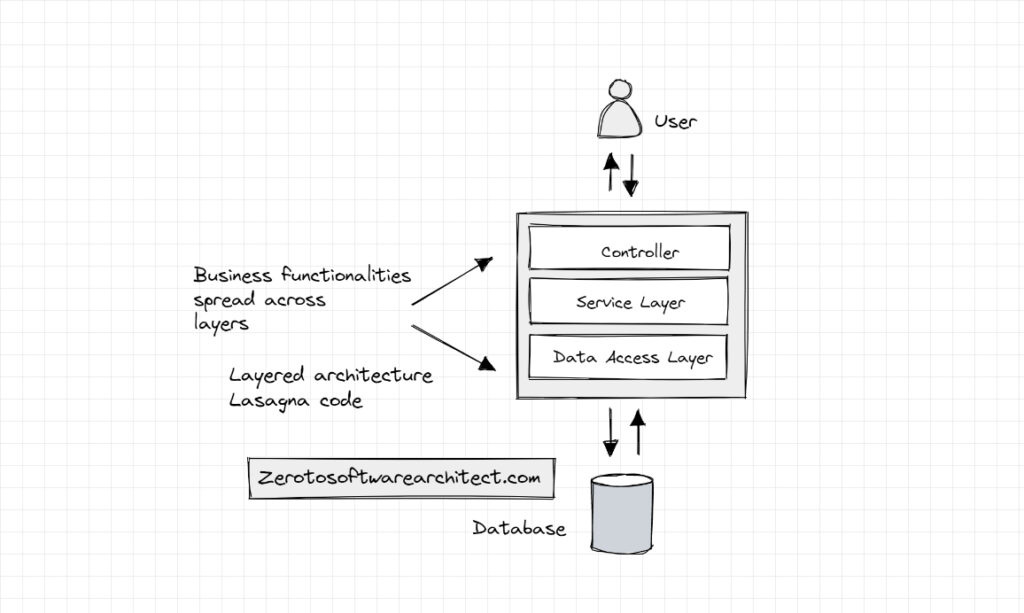

Layered architecture: Application layers

In most enterprise projects, you’ll find code split up into layers like the controller, service and data access layers. We can always add more layers to our code based on the requirements and the complexity of the project.

In the layered architecture, every layer has its specific role; for instance, the controllers will handle requests specific to a certain business feature or domain, the service layer will execute the business logic, the data access layer will communicate with the database and so on. These layers communicate with each other via interfaces to keep things loosely coupled and abstracted.

With this, a specific layer, such as the service layer, doesn’t need to worry about what is going on in the controller or the DAO layer; it just does its job, that is, executing business logic and passing data between the DAO and the controller. A layered architecture helps implement the separation of concerns design principle.

With this architecture, a change in a certain layer of the code won’t impact other layers much. The layers are isolated. This facilitates easy development and testing, keeping the code maintainable and extendable.
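Here is a framework-free Java sketch of those three layers for the cash card example. The names (`CashCard`, `CashCardRepository`, `CashCardService`, `CashCardController`) and the allowance rule are hypothetical; in a Spring application, the framework would wire such classes together instead of the manual constructor wiring shown here. Amounts are stored in cents to avoid floating-point rounding.

```java
import java.util.Map;
import java.util.NoSuchElementException;
import java.util.Optional;
import java.util.concurrent.ConcurrentHashMap;

// Hypothetical domain type.
record CashCard(long id, String owner, long amountCents) {}

// Data access layer contract: the service depends on this interface, not on a database.
interface CashCardRepository {
    Optional<CashCard> findById(long id);
    CashCard save(CashCard card);
}

// One implementation; a database-backed one could replace it without touching other layers.
class InMemoryCashCardRepository implements CashCardRepository {
    private final Map<Long, CashCard> cards = new ConcurrentHashMap<>();

    @Override
    public Optional<CashCard> findById(long id) { return Optional.ofNullable(cards.get(id)); }

    @Override
    public CashCard save(CashCard card) { cards.put(card.id(), card); return card; }
}

// Service layer: business rules only, no HTTP or storage details.
class CashCardService {
    private final CashCardRepository repository;

    CashCardService(CashCardRepository repository) { this.repository = repository; }

    CashCard addAllowance(long cardId, long amountCents) {
        if (amountCents <= 0) throw new IllegalArgumentException("allowance must be positive");
        CashCard card = repository.findById(cardId).orElseThrow(); // NoSuchElementException if unknown
        return repository.save(new CashCard(card.id(), card.owner(), card.amountCents() + amountCents));
    }
}

// Controller layer: translates a request into a service call and a status code.
class CashCardController {
    private final CashCardService service;

    CashCardController(CashCardService service) { this.service = service; }

    int handleAddAllowance(long cardId, long amountCents) {
        try {
            service.addAllowance(cardId, amountCents);
            return 200;
        } catch (NoSuchElementException e) {
            return 404;
        } catch (IllegalArgumentException e) {
            return 400;
        }
    }
}
```

Because each layer only sees the interface below it, the service can be unit-tested with the in-memory repository, which is the same property that makes test-driven development practical in the project.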

The project uses Java and Spring; however, you can also implement it in the programming language and web framework of your choice. What matters is understanding the code flow, application architecture and system design. Once these are clear, you’ll be able to code the application in any language.

Check out this post on mastering system design for your interviews written by me on my blog.

Project 4: Build a batch application that generates billing reports for a cell phone company

Tags: Backend, Web Service, Java, Spring Boot, Spring Batch

Level: Beginner, Intermediate

Building a batch application with Spring Batch is a hands-on project by Spring Academy that helps you code a robust, fault-tolerant batch application that generates billing reports for a fictional cell phone company.

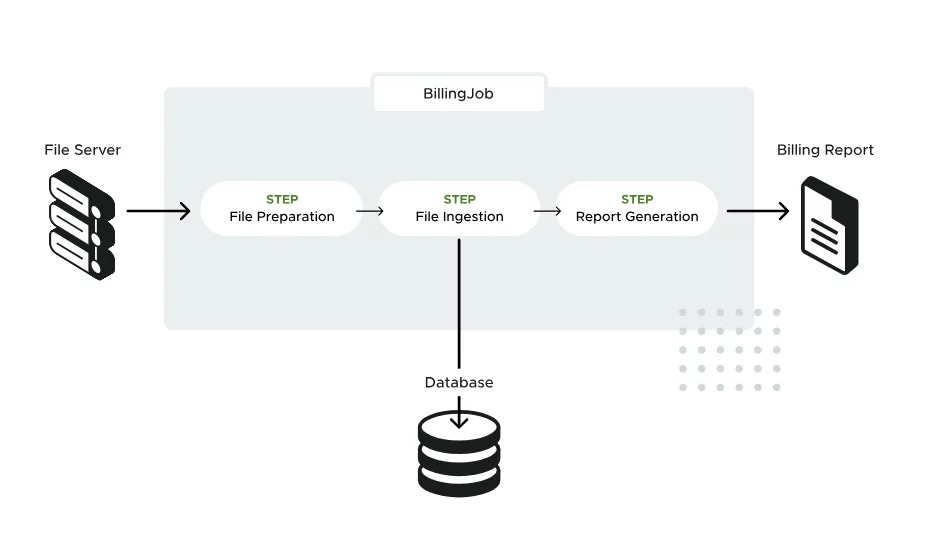

You’ll implement a batch job called the Billing Job, which consists of the following steps:

The file preparation stage copies the file containing the monthly usage for the cell phone company customers from the file server to the staging server.

The ingestion step loads the files into a relational database, which holds the data used to generate the billing reports.

The report generation component processes the billing information from the database and creates a flat file for the customers.
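The three steps map naturally onto plain Java methods. This sketch is not Spring Batch: the CSV layout (`customerId,dataMb,callMinutes`), the tariff, the `UsageRecord` type and the in-memory list standing in for the database table are all hypothetical. It does show one fault-tolerance idea the project covers: skipping and counting malformed records instead of failing the whole job.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical usage record parsed from one CSV line: customerId,dataMb,callMinutes
record UsageRecord(String customerId, long dataMb, long callMinutes) {}

class BillingJob {
    // Hypothetical tariff, in cents.
    static final long CENTS_PER_MB = 1;
    static final long CENTS_PER_MINUTE = 5;

    int skipped = 0; // malformed lines are counted and skipped instead of failing the job

    // Step 1, file preparation: copy the monthly usage file into the staging directory.
    Path prepare(Path source, Path stagingDir) {
        try {
            Files.createDirectories(stagingDir);
            return Files.copy(source, stagingDir.resolve(source.getFileName()),
                    StandardCopyOption.REPLACE_EXISTING);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    // Step 2, ingestion: parse the staged file; the returned list stands in for the database table.
    List<UsageRecord> ingest(Path stagedFile) {
        List<UsageRecord> table = new ArrayList<>();
        try {
            for (String line : Files.readAllLines(stagedFile)) {
                String[] f = line.split(",");
                try {
                    table.add(new UsageRecord(f[0].trim(),
                            Long.parseLong(f[1].trim()), Long.parseLong(f[2].trim())));
                } catch (NumberFormatException | ArrayIndexOutOfBoundsException e) {
                    skipped++;
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return table;
    }

    // Step 3, report generation: total charges per customer, written as a flat file.
    Path report(List<UsageRecord> table, Path reportFile) {
        Map<String, Long> centsByCustomer = new TreeMap<>();
        for (UsageRecord r : table) {
            long cents = r.dataMb() * CENTS_PER_MB + r.callMinutes() * CENTS_PER_MINUTE;
            centsByCustomer.merge(r.customerId(), cents, Long::sum);
        }
        List<String> lines = new ArrayList<>();
        centsByCustomer.forEach((customer, cents) ->
                lines.add(String.format("%s,%d.%02d", customer, cents / 100, cents % 100)));
        try {
            return Files.write(reportFile, lines);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Spring Batch adds what this sketch lacks, such as chunked reads and writes, restartability and job metadata, which is exactly what the project teaches.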

Key learnings from this project

You’ll learn:

The fundamental concepts of batch processing in web services

Spring Batch and Spring Boot fundamentals

Application architecture involving the batch processing module

The importance of workflows in system design

To create, run and test fault-tolerant batch jobs

Batch processing

Enterprise systems extensively leverage batch processing to run automated processes on the backend to fulfill their day-to-day business requirements.

Core examples include billing and payroll processing, medical billing and other information-processing tasks, data backups, report generation, transaction processing, sales inventory updates, etc.

Building a batch processing application from the bare bones will give you an excellent insight into the functionality of such systems and an edge as a backend developer.

Project 5: Build a Hackernews clone backed by a GraphQL API

Tags: Backend, Web Service, API, Frontend

Level: Beginner

This free and open-source tutorial helps us create a GraphQL app from zero to production. The project entails building a Hackernews clone from the bare bones, following best practices, and using the programming language and framework of your choice.

Key learnings from this project

You’ll learn the fundamentals of GraphQL and build a full-stack application from the bare bones.

Project 6: Build an SQL-based algorithmic trading system with Redpanda and Apache Flink

Tags: Backend, Data Processing, Data Streaming, Flink, Redpanda, SQL, Python

Level: Intermediate, Advanced

Redpanda is a Kafka-compatible data streaming platform. Its team has published a comprehensive guide on building an SQL-based algorithmic trading system with Redpanda, Apache Flink and some finance APIs.

The application enables end users to make investment decisions automatically based on market data, including executing trades programmatically.

Key learnings from this project

You’ll learn:

Data and stream processing fundamentals, including an architectural pattern called event sourcing

Integrating your code with external APIs

Building low-latency systems that react quickly to market events

Building Apache Flink applications leveraging Redpanda

An emerging data streaming technology

Familiarity with Redpanda fundamentals is a prerequisite for this project. You can take a hands-on course on getting started with Redpanda here. However, the project does not require prior knowledge of Flink or algorithmic trading.

Redpanda

Redpanda is a source-available data streaming platform built from the ground up in C++ for performance.

According to Redpanda’s own benchmarks, it delivers at least 10x lower tail latencies than Kafka while using up to 3x fewer nodes.

The platform uses a thread-per-core architecture built on the Seastar framework to achieve high throughput. ScyllaDB, also written in C++, uses Seastar too, which makes it highly asynchronous with a shared-nothing design. ScyllaDB is optimized for modern multicore, multiprocessor NUMA cloud hardware and runs millions of operations per second at sub-millisecond average latencies.
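The shared-nothing, thread-per-core idea can be illustrated, loosely, in Java. In this hypothetical sketch, each shard owns a plain `HashMap` that only its own single-threaded executor ever touches, and callers send work to the owning shard instead of locking shared state. Seastar itself is a C++ framework that pins one thread per core and uses its own message passing; the JVM cannot pin threads, so treat this as an analogy, not an implementation.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Conceptual analogy only: one thread owns one shard, no shared mutable state, no locks.
class ShardedCounter {
    private final ExecutorService[] shards;       // one single-threaded executor per shard
    private final Map<String, Long>[] shardData;  // each map is touched only by its own thread

    @SuppressWarnings("unchecked")
    ShardedCounter(int shardCount) {
        shards = new ExecutorService[shardCount];
        shardData = new Map[shardCount];
        for (int i = 0; i < shardCount; i++) {
            shards[i] = Executors.newSingleThreadExecutor();
            shardData[i] = new HashMap<>(); // a plain HashMap is safe: only one thread uses it
        }
    }

    private int shardOf(String key) {
        return Math.floorMod(key.hashCode(), shards.length);
    }

    // Work is routed to the owning shard as a message instead of locking shared data.
    CompletableFuture<Long> increment(String key) {
        int s = shardOf(key);
        return CompletableFuture.supplyAsync(() -> shardData[s].merge(key, 1L, Long::sum), shards[s]);
    }

    CompletableFuture<Long> get(String key) {
        int s = shardOf(key);
        return CompletableFuture.supplyAsync(() -> shardData[s].getOrDefault(key, 0L), shards[s]);
    }

    void shutdown() {
        for (ExecutorService e : shards) e.shutdown();
    }
}
```

No shard ever waits on a lock held by another, which is the property that lets shared-nothing designs scale with the number of cores.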

I’ve discussed ScyllaDB’s shard-per-core architecture on my blog here. Do give it a read if you wish to delve into the details.

Being a data streaming platform, Redpanda is a natural fit for the algorithmic trading system, as it lets us continuously process the data streams obtained from the finance APIs.

Apache Flink

Apache Flink is an open-source stream processing framework with high-throughput, scalable data processing capabilities, supporting both batch and real-time data processing. It is leveraged by a bunch of big guns in the industry.

Flink supports a wide range of use cases, from computing the average price of a stock over a sliding time window to something as simple as a 1:1 transformation of a single data point.

Algorithmic trading applications make heavy use of time-series analysis, and the framework’s windowing capabilities are leveraged to implement the project features. Furthermore, Flink’s state checkpointing and recovery features prove to be pretty helpful as well in coding such use cases.
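A sliding window is easier to reason about with numbers. The plain-Java sketch below, which is not Flink's API, assigns each trade to every overlapping window of length `sizeMillis` that starts every `slideMillis`, using window-start arithmetic modeled on Flink's sliding event-time windows, and then averages prices per window. The `Trade` record is hypothetical, and the sketch ignores watermarks and late data, which Flink handles for you.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical market event: a trade price observed at an event timestamp in milliseconds.
record Trade(long timestampMillis, double price) {}

class SlidingWindowAverage {
    // Returns window start -> average price, for windows of sizeMillis starting every slideMillis.
    static Map<Long, Double> averages(List<Trade> trades, long sizeMillis, long slideMillis) {
        Map<Long, double[]> acc = new TreeMap<>(); // window start -> {sum, count}
        for (Trade t : trades) {
            long ts = t.timestampMillis();
            long lastStart = ts - Math.floorMod(ts, slideMillis); // latest window containing ts
            // Walk back one slide at a time while the window still covers ts.
            for (long start = lastStart; start > ts - sizeMillis; start -= slideMillis) {
                double[] sumCount = acc.computeIfAbsent(start, k -> new double[2]);
                sumCount[0] += t.price();
                sumCount[1] += 1;
            }
        }
        Map<Long, Double> result = new TreeMap<>();
        acc.forEach((start, sumCount) -> result.put(start, sumCount[0] / sumCount[1]));
        return result;
    }
}
```

With a 2-second window sliding every second, trades at 0 s, 1 s and 2 s priced 10, 20 and 30 produce averages of 10, 15, 25 and 30 for the windows starting at -1 s, 0 s, 1 s and 2 s: each trade lands in two overlapping windows.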

Event sourcing

Systems dealing with time-series data often need more than a snapshot of the current data: they process the entire history of events to produce an outcome.

This historical data helps us understand how the market changes over time and evaluate different trading strategies by replaying the relevant events.

These requirements are addressed by an architectural pattern called the event sourcing pattern. Event sourcing is a pattern where the changes that occur over a period of time are stored immutably as events in an append-only log. This log acts as a record, providing more comprehensive insights into the system and when replayed, helps us reproduce the past system state deterministically.
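A minimal in-memory Java sketch of the pattern: events are only ever appended, and state is derived by replaying them, optionally stopping at an earlier sequence number to reproduce a past state. The `TradeEvent` type, the ticker symbols and the position logic are hypothetical; in a real system the log would live in durable storage, such as a topic on a streaming platform, rather than in a `List`.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical event: a change in the number of shares held for a symbol.
record TradeEvent(long sequence, String symbol, int quantityDelta) {}

class PositionLedger {
    private final List<TradeEvent> log = new ArrayList<>(); // append-only

    void append(String symbol, int quantityDelta) {
        log.add(new TradeEvent(log.size(), symbol, quantityDelta));
    }

    List<TradeEvent> events() {
        return Collections.unmodifiableList(log); // callers cannot rewrite history
    }

    // Rebuild positions (symbol -> shares held) by replaying events up to a sequence number.
    // Replaying the same prefix always yields the same state, so past states are reproducible.
    Map<String, Integer> replayUntil(long lastSequenceInclusive) {
        Map<String, Integer> positions = new HashMap<>();
        for (TradeEvent e : log) {
            if (e.sequence() > lastSequenceInclusive) break;
            positions.merge(e.symbol(), e.quantityDelta(), Integer::sum);
        }
        return positions;
    }

    Map<String, Integer> currentState() {
        return replayUntil(Long.MAX_VALUE);
    }
}
```

The current state is just one possible projection of the log; a backtest of a new strategy could replay the same events into a different projection without touching the stored history.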

The technologies used in the project above are freely available, and implementing the project from scratch will be pretty good hands-on practice.

Project 7: Build a low-latency video streaming app with ScyllaDB & NextJS

Tags: Backend, Web Service, Cloud, ScyllaDB, NextJS, TypeScript

Level: Intermediate, Advanced

In a blog article, ScyllaDB walked through a video streaming app with minimal features, such as listing the videos a user has started watching, resuming a video from where the user left off and displaying a progress bar under each video thumbnail.

You’ll find the GitHub repo for the project here.

ScyllaDB is an open-source NoSQL wide-column database similar to Apache Cassandra. I’ve discussed NoSQL DB architecture with ScyllaDB’s shard-per-core design here. Do give it a read if you wish to delve deeper into it.

Disney+ Hotstar previously replaced Redis and Elasticsearch with ScyllaDB to implement the ‘Continue Watching’ feature in their service. I’ve written briefly about it; do give it a read as well.

The above-linked resources will provide you with a background on ScyllaDB.

Key learnings from this project

Real-world video streaming services are complex, with many more features beyond storing videos in cloud object stores. However, the project above is intentionally minimal, giving us insight into how a low-latency NoSQL store can handle the large-scale data storage and retrieval requirements of a video streaming application.

You’ll get insights into data modeling for a video streaming service, including why the data model for an application or feature should ideally start from an understanding of its queries and data access patterns, rather than creating the schema first and working out the query patterns afterward.
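To illustrate query-first thinking, start from the feature's queries: "which videos has this user started, most recent first?" and "where did they leave off?". Both begin with a user, so this hypothetical in-memory Java model keys everything by user ID, much like choosing the user ID as the partition key in a wide-column store. It is not the schema from ScyllaDB's sample app, and all names are assumptions.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical row serving the "continue watching" row and the progress bar.
record WatchProgress(String videoId, long watchedSeconds, long durationSeconds, long updatedAtMillis) {
    double percent() { return 100.0 * watchedSeconds / durationSeconds; } // drives the progress bar
}

// Query-first model: keyed by userId because every query in this feature starts from a user,
// so a single lookup answers each query without scanning other users' data.
class ContinueWatchingStore {
    private final Map<String, Map<String, WatchProgress>> byUser = new HashMap<>();

    // Upsert: watching the same video again overwrites its progress instead of adding a row.
    void recordProgress(String userId, WatchProgress progress) {
        byUser.computeIfAbsent(userId, k -> new HashMap<>()).put(progress.videoId(), progress);
    }

    // Query 1: videos the user started, most recently watched first.
    List<WatchProgress> continueWatching(String userId, int limit) {
        List<WatchProgress> rows = new ArrayList<>(byUser.getOrDefault(userId, Map.of()).values());
        rows.sort(Comparator.comparingLong(WatchProgress::updatedAtMillis).reversed());
        return rows.subList(0, Math.min(limit, rows.size()));
    }

    // Query 2: resume point for the player, or 0 if the user never started the video.
    long resumeAt(String userId, String videoId) {
        WatchProgress p = byUser.getOrDefault(userId, Map.of()).get(videoId);
        return p == null ? 0 : p.watchedSeconds();
    }
}
```

Had the model started from a normalized schema (users, videos, views), each query would need joins or scans; starting from the queries makes every lookup a single keyed read.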

Distributed aggregates & user-defined functions

The project also entails the use of distributed aggregates and user-defined functions. Distributed aggregates help us run aggregate operations (like SUM, COUNT, AVG, etc.) across a distributed system.

When data is spread across multiple nodes of a distributed database, distributed aggregates let us perform aggregation operations using the database’s parallel processing capabilities, without moving all the required data to a single node.

Related read: How ScyllaDB distributed aggregates reduce the query execution time up to 20x
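The core trick behind distributed aggregates can be shown in a few lines of Java: each node reduces its own rows to a small partial result, and a coordinator merges the partials. For `AVG`, the partial must be a (sum, count) pair, not a local average. Here the "nodes" are just inner lists processed in parallel with `CompletableFuture`; this is a conceptual sketch, not ScyllaDB's implementation.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.stream.Collectors;

// Partial aggregate computed locally on each node: enough to merge SUM, COUNT and AVG.
record Partial(long sum, long count) {
    Partial merge(Partial other) { return new Partial(sum + other.sum, count + other.count); }
}

class DistributedAvg {
    // Each inner list stands in for the rows stored on one node (hypothetical layout).
    static double average(List<List<Long>> nodes) {
        // Fan out: every "node" aggregates its own rows in parallel; only two numbers travel back.
        List<CompletableFuture<Partial>> futures = nodes.stream()
                .map(rows -> CompletableFuture.supplyAsync(() ->
                        new Partial(rows.stream().mapToLong(Long::longValue).sum(), rows.size())))
                .collect(Collectors.toList());

        // Coordinator merges the (sum, count) pairs. Averaging the per-node averages
        // would be wrong whenever nodes hold different numbers of rows.
        Partial total = futures.stream()
                .map(CompletableFuture::join)
                .reduce(new Partial(0, 0), Partial::merge);
        return (double) total.sum() / total.count();
    }
}
```

For nodes holding {10, 20}, {30} and {40, 50, 60}, the merged result is 210 / 6 = 35.0, whereas averaging the per-node averages (15, 30 and 50) would give about 31.67.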

User-defined functions, on the other hand, are custom functions that extend the functionality of the database. They can be written in any of the programming languages the DB supports for them.

Furthermore, the project leverages NextJS & TypeScript, so you’ll get some insight into those as well.

Speaking of video streaming services, below are a few recommended reads from my blog that I’ve written earlier. Do give them a read.

How does YouTube store so many videos without running out of storage space?

How does YouTube serve videos with high quality and low latency? An insight into its architecture.

How Hotstar scaled with 10.3 million concurrent users – an architectural insight

Project 8: Code a TCP/IP server from scratch

Tags: Backend, Java, Networking, Web

Level: Beginner, Intermediate

In two detailed posts, I've implemented a single-threaded and a multi-threaded TCP/IP server from the bare bones in Java. You can follow the posts to implement your TCP/IP server either in Java or the programming language of your choice.

The single-threaded server handles client requests sequentially in a blocking fashion, one request at a time. Meanwhile, any concurrent client requests wait in a queue until the main thread of execution is free to handle the next one.

In contrast, a multithreaded server improves throughput by handling many client requests concurrently, in either a blocking or a non-blocking fashion.
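As a compact sketch of the multithreaded, blocking variant (not the code from the linked posts), the Java echo server below runs an accept loop on one thread and hands each connection to a fixed-size thread pool. Calling `handle(client)` directly in the accept loop instead would turn it into the single-threaded server described above. The class name, the `echo:` reply format and the pool size are arbitrary choices for the example.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.net.ServerSocket;
import java.net.Socket;
import java.nio.charset.StandardCharsets;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Blocking, thread-pooled line echo server.
class PooledEchoServer {
    private final ServerSocket serverSocket;
    private final ExecutorService workers;

    PooledEchoServer(int port, int poolSize) {
        try {
            serverSocket = new ServerSocket(port); // port 0 = let the OS pick a free port
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        workers = Executors.newFixedThreadPool(poolSize);
    }

    int port() { return serverSocket.getLocalPort(); }

    void start() {
        Thread acceptor = new Thread(() -> {
            while (!serverSocket.isClosed()) {
                try {
                    Socket client = serverSocket.accept(); // blocks until a client connects
                    workers.submit(() -> handle(client));   // a pooled thread serves this client
                } catch (IOException e) {
                    // accept() fails once stop() closes the socket; the loop then exits
                }
            }
        });
        acceptor.setDaemon(true);
        acceptor.start();
    }

    private void handle(Socket client) {
        try (client;
             BufferedReader in = new BufferedReader(new InputStreamReader(client.getInputStream(), StandardCharsets.UTF_8));
             PrintWriter out = new PrintWriter(client.getOutputStream(), true, StandardCharsets.UTF_8)) {
            String line;
            while ((line = in.readLine()) != null) { // blocking read, one line per request
                out.println("echo: " + line);
            }
        } catch (IOException e) {
            // client disconnected abruptly; the socket is closed by try-with-resources
        }
    }

    void stop() {
        try { serverSocket.close(); } catch (IOException ignored) { }
        workers.shutdownNow();
    }
}
```

To try it, create `new PooledEchoServer(0, 4)`, call `start()`, connect with `nc localhost <port>` using the port from `port()`, and type a line. With a pool of four, a fifth simultaneous client waits until a worker frees up, which is the trade-off of bounding threads.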

Key learnings from this project

The TCP/IP protocol

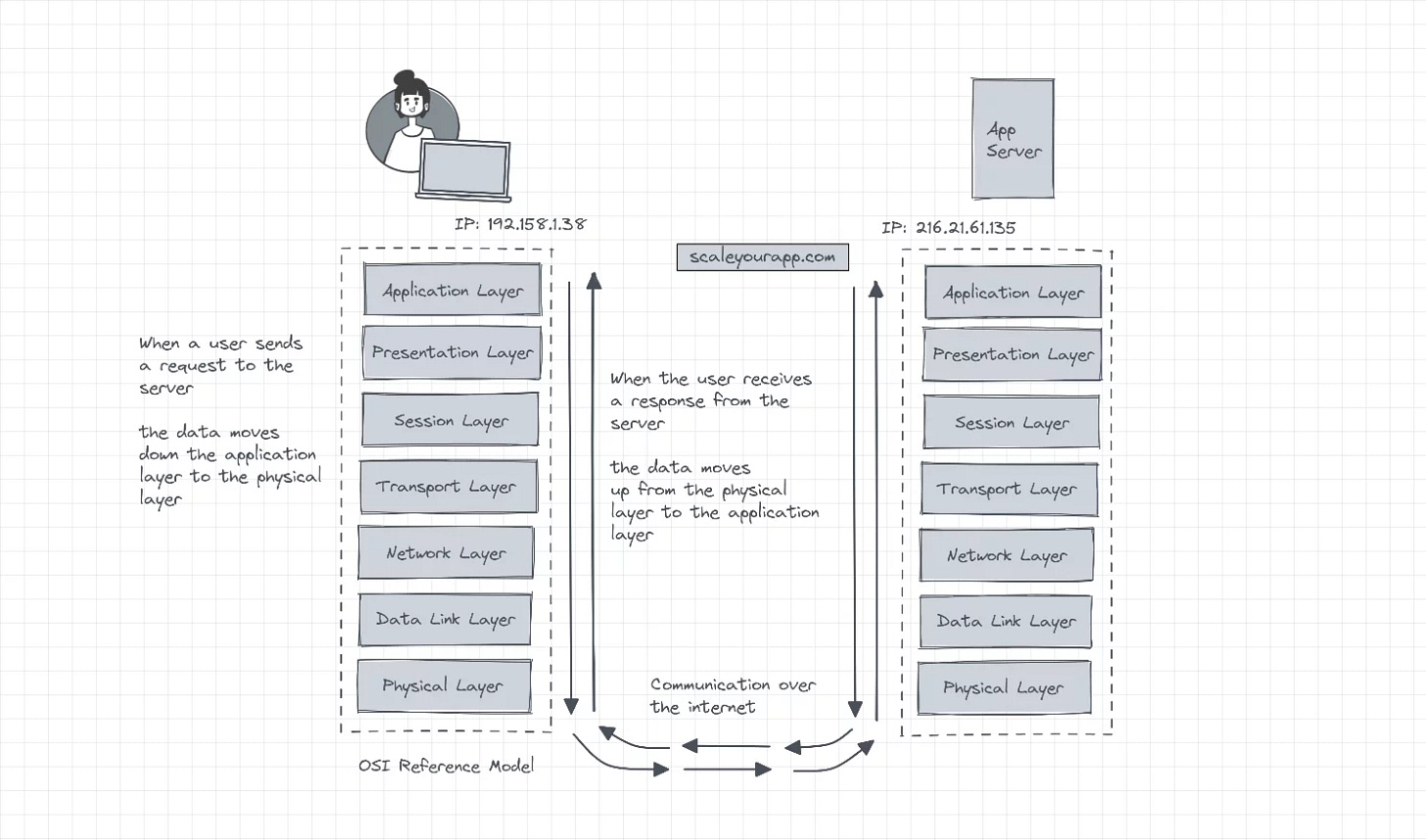

The TCP/IP (Transmission Control Protocol/Internet Protocol) model is a suite of data communication protocols at the core of communication over the internet.

It abstracts away most of the intricacies and complexities of network communication from our applications, such as handling congestion, ensuring data is delivered accurately and intact, and preventing the network from being overwhelmed with excessive data using various network algorithms.

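Whereas the OSI model has seven layers, the TCP/IP model is usually described with four (application, transport, internet and network interface). The five-layer variant common in textbooks also separates out the physical hardware, giving the application layer, transport layer, internet layer, network interface layer and physical hardware layer.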

I've written a detailed post on the IP layers and the TCP/IP model on my blog. Do check it out. It will give you an overview of the fundamentals before you get down to writing code.

TCP/IP & distributed systems

TCP/IP servers, and clusters of them, form the core of almost all distributed systems. Distributed systems like Kafka and Redis rely heavily on TCP for reliable, ordered communication and for flow and congestion control, and they build features such as failure detection and network partition handling on top of it.

Redis

If we take Redis, for instance, Redis clients connect to Redis servers using TCP connections. Each client connection is handled by a separate socket, ensuring commands and their responses are reliably exchanged. Redis typically uses long-lived TCP connections to handle multiple requests and responses, minimizing the overhead of establishing connections repeatedly.

Its RESP (REdis Serialization Protocol) is designed to work efficiently over TCP. Using TCP, RESP messages are sent from the client to the server and vice versa, ensuring reliable and ordered delivery.
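RESP is simple enough to encode by hand. A client sends each command as an array of bulk strings: `*` plus the number of arguments, then for each argument `$` plus its length in bytes followed by the bytes themselves, with every part terminated by `\r\n`. The Java helper below is a minimal sketch covering only command encoding and simple-string or error replies; the class and method names are hypothetical, and a real client would also handle integers, bulk strings, arrays and pipelining.

```java
import java.nio.charset.StandardCharsets;

class Resp {
    // Encodes a command as a RESP array of bulk strings:
    // "*<n>\r\n", then per argument "$<byte length>\r\n<bytes>\r\n".
    static String encodeCommand(String... args) {
        StringBuilder sb = new StringBuilder();
        sb.append('*').append(args.length).append("\r\n");
        for (String arg : args) {
            int byteLength = arg.getBytes(StandardCharsets.UTF_8).length; // bytes, not chars
            sb.append('$').append(byteLength).append("\r\n").append(arg).append("\r\n");
        }
        return sb.toString();
    }

    // Parses a simple-string reply such as "+OK\r\n"; an error reply ("-...") becomes an exception.
    static String parseSimpleReply(String reply) {
        if (reply.length() < 3 || !reply.endsWith("\r\n")) {
            throw new IllegalArgumentException("incomplete reply");
        }
        String body = reply.substring(1, reply.length() - 2);
        char type = reply.charAt(0);
        if (type == '+') return body;
        if (type == '-') throw new IllegalStateException("Redis error: " + body);
        throw new IllegalArgumentException("unsupported reply type: " + type);
    }
}
```

For example, `encodeCommand("SET", "key", "value")` produces `*3\r\n$3\r\nSET\r\n$3\r\nkey\r\n$5\r\nvalue\r\n`, which a client would write to a TCP socket connected to the Redis server (port 6379 by default).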

In the cluster mode, multiple Redis nodes communicate with each other over TCP to manage data partitioning and replication. Nodes exchange information about the cluster state, data distribution, and more.

Redis further uses TCP connections to replicate data from master nodes to replica nodes. This ensures data redundancy and improves fault tolerance. Both the initial synchronization and the ongoing replication traffic are transmitted over TCP, ensuring that the replicas stay consistent with the master.

Kafka

Kafka producers and consumers connect to Kafka brokers over TCP connections, ensuring the data is reliably produced and consumed. They maintain persistent TCP connections to brokers, reducing the overhead associated with repeatedly establishing connections, just like Redis.

Kafka uses a leader-follower replication model where the leader broker sends data to follower brokers over TCP. This ensures that data is consistently replicated across the cluster.

Moreover, its binary communication protocol operates over TCP as well and is optimized for high throughput and low latency.
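One detail worth seeing in code: TCP delivers a byte stream, not messages, so binary protocols have to frame their messages. Kafka's protocol prefixes every request and response with a 4-byte big-endian size. The Java sketch below shows only that framing idea; the `Frames` class is hypothetical, and the payload is opaque bytes rather than Kafka's actual request headers and fields.

```java
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

// Size-prefixed framing over a byte stream such as a TCP socket.
class Frames {
    static void writeFrame(OutputStream out, byte[] payload) throws IOException {
        DataOutputStream data = new DataOutputStream(out);
        data.writeInt(payload.length); // 4-byte big-endian length prefix
        data.write(payload);
        data.flush();
    }

    // readFully blocks until the whole frame has arrived, even if TCP delivered it
    // in several segments or glued it to the next frame.
    static byte[] readFrame(InputStream in) throws IOException {
        DataInputStream data = new DataInputStream(in);
        int length = data.readInt();
        if (length < 0) throw new IOException("negative frame length: " + length);
        byte[] payload = new byte[length];
        data.readFully(payload);
        return payload;
    }
}
```

Without the length prefix, a reader could not tell where one message ends and the next begins; with it, the receiver reads exactly four bytes and then exactly that many payload bytes.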

This gives an idea of how critical TCP/IP is to implementing efficient, reliable and scalable distributed systems.

Intricacies of client-server communication

When implementing a TCP/IP server, you'll understand the intricacies of network communication and gain good insight into how servers exchange data over the web. You’ll also understand essential concepts such as IP addresses, ports and sockets.

Furthermore, you'll understand multiple approaches we can leverage to make our server handle concurrent connections based on the use case and the pros and cons of each approach.

There are primarily five ways to make our server handle concurrent connections:

Spawning a new thread for every client request

Thread pooling: Leveraging existing threads from the pool to serve subsequent requests

Hybrid approach: A mix of spawning new threads and pooling

Non-blocking asynchronous I/O: Enables our server to handle client requests in a non-blocking fashion

Event-driven approach: Closely related to the asynchronous, non-blocking approach; here, the server responds to events and processes the requests with event loops and callbacks.
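The non-blocking and event-driven approaches can be sketched with Java NIO: a single thread registers all channels with a `Selector` and runs an event loop that reacts only to channels that are ready to accept or read. This minimal echo server is an illustration under simplifying assumptions (one shared 4 KB buffer, and writes that spin until complete instead of waiting for `OP_WRITE`), not production code.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.Iterator;

// Event-driven, non-blocking echo server: one thread and one Selector serve every connection.
class NioEchoServer implements Runnable {
    private final Selector selector;
    private final ServerSocketChannel server;

    NioEchoServer(int port) {
        try {
            selector = Selector.open();
            server = ServerSocketChannel.open();
            server.bind(new InetSocketAddress(port)); // port 0 = any free port
            server.configureBlocking(false);
            server.register(selector, SelectionKey.OP_ACCEPT);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    int port() { return server.socket().getLocalPort(); }

    @Override
    public void run() {
        ByteBuffer buffer = ByteBuffer.allocate(4096); // shared: only the event-loop thread uses it
        try {
            while (!Thread.currentThread().isInterrupted()) {
                selector.select(); // event loop: block until a channel is ready (or interrupted)
                Iterator<SelectionKey> keys = selector.selectedKeys().iterator();
                while (keys.hasNext()) {
                    SelectionKey key = keys.next();
                    keys.remove();
                    try {
                        if (key.isAcceptable()) {
                            SocketChannel client = server.accept(); // null if nothing is pending
                            if (client == null) continue;
                            client.configureBlocking(false);
                            client.register(selector, SelectionKey.OP_READ);
                        } else if (key.isReadable()) {
                            SocketChannel client = (SocketChannel) key.channel();
                            buffer.clear();
                            if (client.read(buffer) == -1) { // peer closed the connection
                                key.cancel();
                                client.close();
                                continue;
                            }
                            buffer.flip();
                            // Simplification: spin until written; real code would wait for OP_WRITE.
                            while (buffer.hasRemaining()) client.write(buffer);
                        }
                    } catch (IOException e) {
                        key.cancel(); // drop only the failing connection, keep serving the others
                        try { key.channel().close(); } catch (IOException ignored) { }
                    }
                }
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } finally {
            try { selector.close(); server.close(); } catch (IOException ignored) { }
        }
    }
}
```

Run it with `new Thread(new NioEchoServer(0)).start()` and stop it by interrupting that thread. Because no thread ever blocks on a single client, one thread can serve many idle connections, which is why event-loop servers scale to large numbers of concurrent clients with little memory.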

I've touched upon these approaches in my post on implementing a multithreaded TCP/IP server. Modern servers combine several of these approaches to achieve the desired behavior, striking a balance between resource utilization, responsiveness and scalability.

Developers monitor the memory usage, tune the code continually for optimum performance and adapt to changing workload conditions. This may include studying the average number of concurrent requests, processing time per request, incoming request patterns like frequency of traffic spikes, system resource consumption such as CPU, memory, I/O capacity, scalability requirements, and so on.

This project will be a stepping stone into the realm of systems programming. I've created a roadmap for distributed system programming here, in case you want to check it out.

Though the code I've written is in Java, you can learn to code distributed systems in the backend programming language of your choice with CodeCrafters (Affiliate).

CodeCrafters is a platform that helps us build systems like Redis, Docker, Git, a DNS server, and more, step by step from the bare bones, in the programming language of our choice.

It's designed to help developers learn how to build complex systems from the ground up, focusing on teaching the internals of distributed systems and other related technologies through hands-on, project-based learning.

Each project is broken down into stages, with each stage focusing on implementing a specific feature or component of the system. Do check it out and kickstart your hands-on systems programming learning.

If you decide to make a purchase, you can use my unique link to get 40% off.

More projects are coming soon to this list. My next article will be on how to wrap our heads around a GitHub repository. If you wish to be notified of my future posts, including the new project additions to this list, do subscribe to this newsletter (if you haven’t yet). Cheers!
