Kickstart your distributed systems programming and architecture learning with this post

Distributed systems is a term that is used across layers in the compute stack. For instance, large-scale services like Uber, Netflix, YouTube and the like are called distributed systems, distributed services, or more commonly, web services.

Services like these, handling millions of concurrent users at any point in time, are highly available, scalable and reliable. Under the covers, they are powered by scalable distributed systems such as Kafka, Redis, MongoDB, Cassandra, and so on, which enable these services to process millions of concurrent users across different cloud regions globally with high throughput, consistency, reliability, and availability.

Distributed is a term that is applicable at multiple levels. Systems such as Kafka, Redis, etc., are distributed as they run across clusters comprising thousands of nodes deployed globally. They are distributed at the cluster or the infrastructure level.

In contrast, consumer-facing services like Netflix and Uber are called distributed because they comprise thousands of microservices deployed across the globe that work together to deliver the desired functionality.

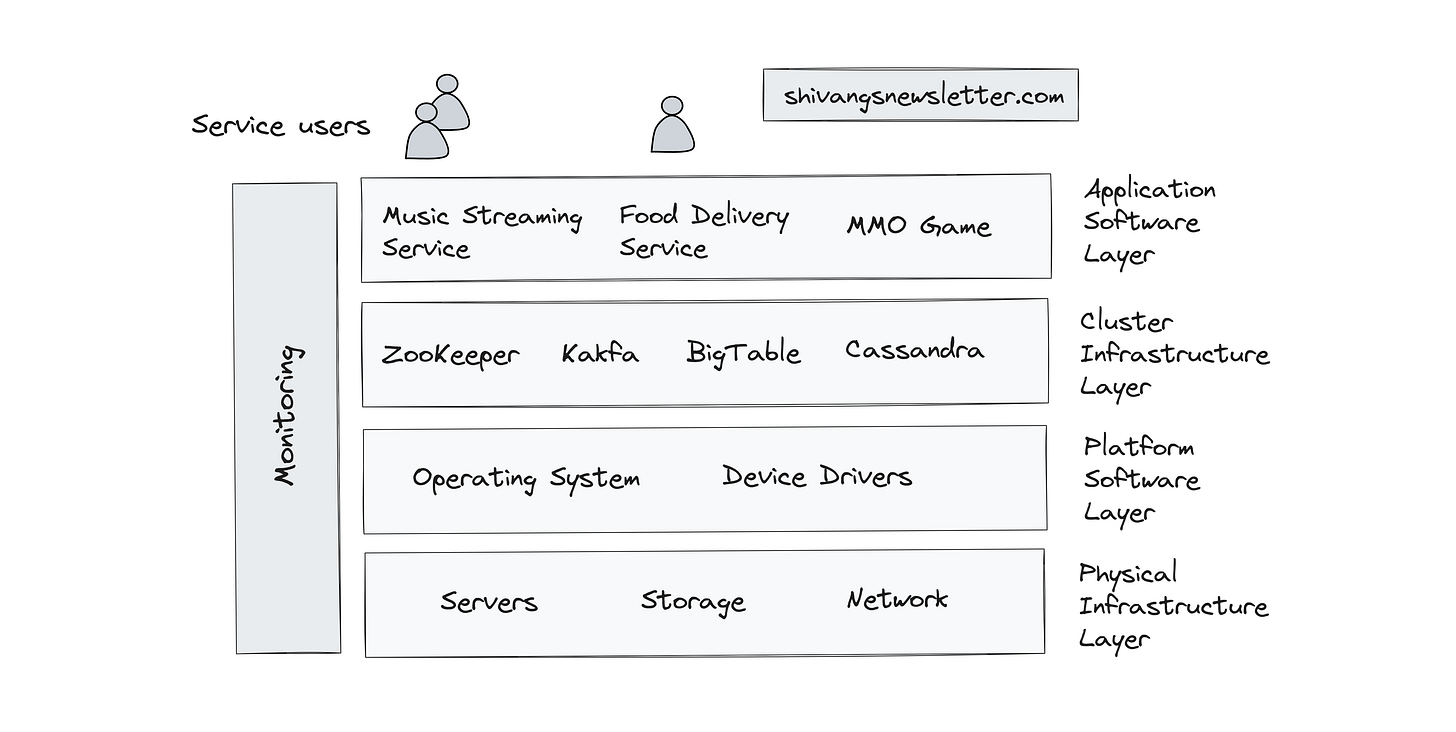

Now, let's quickly look at the distributed compute/infrastructure stack, comprising multiple layers.

Distributed compute stack

The top layer in the stack is the application software layer, which holds the consumer-facing web services like Uber, Spotify, Netflix and so on.

The application layer is followed by the cluster infrastructure layer, which holds the systems that power the services running above it: distributed databases (Redis, MongoDB, Cassandra), message brokers (Kafka), distributed caches (Redis), distributed file systems, cluster coordination systems (ZooKeeper, etcd), and so on.

These systems take care of things like distributed data coordination (eventual consistency, strong consistency, etc.), availability, scalability, and so on, without the need for the application programmer to worry too much about the underlying intricacies of how clusters dynamically adjust their size, how low-level memory operations are executed, how the clusters ensure fault tolerance and such.

To delve into the details of how large-scale services maintain data consistency when deployed across the globe, check out my post on applying DB consistency in a web service.

Furthermore, a case study post on distributing our database in different cloud regions globally to manage load & latency is a recommended read.

The cluster infrastructure layer is followed by the platform software layer, which holds the operating system, device drivers, firmware, virtualization setup, and such. This layer provides an abstraction enabling the distributed systems to interact with the underlying hardware they run on.

We could further split the OS and the virtualization layer into two, but for simplicity, we’ll keep them together for now.

If you wish to delve into the specifics of infrastructure, deployment, and virtualization, the two posts below are highly recommended reads:

Why doesn't Cloudflare use containers in their Workers platform infrastructure?

How Unikraft Cloud reduces serverless cold starts to milliseconds with unikernels and microVMs

The platform software layer is followed by the physical infrastructure layer, which consists of the compute servers mounted in a server rack, physical storage consisting of the hard drives and Flash SSDs, the RAM attached to the compute servers, and the data center network that connects all the physical infrastructure.

Furthermore, all the layers of the compute stack have a vertical layer applied to them, which is the monitoring layer. This layer contains monitoring code or systems that ensure good service health and continual uptime of components running in all the layers.

Keeping track of metrics like server uptime, performance, latency, throughput, resource consumption, etc., is crucial for running performant software. This is more commonly known as observability.

I've published a detailed post on it. Do check it out.

Systems programming

Now that we have an idea of the difference between a distributed web service (running at the top of the compute stack) and a distributed system that runs across nodes in a cluster, interacting with the hardware, let's step into the realm of systems programming.

Systems programming primarily entails writing performant software that directly interacts with the hardware and makes the most of the underlying resources.

When we run this software on multiple nodes in a cluster, the system becomes distributed in nature, entailing the application of multiple concepts.

I have started a crash course on building a distributed message broker like Kafka from the bare bones. Do give it a read to begin with your distributed systems programming learning.

I am also listing below the concepts that are typically involved when we implement a distributed system. Though I am listing them for reference, we don't need to know them all to start coding a distributed system for learning.

Concepts

Distributed systems fundamentals

The nodes of our distributed system communicate over the network and the system should address the complexities of network communication, concurrency, data consistency and fault tolerance.

This requires knowledge of networking protocols such as TCP/IP, HTTP, serialization mechanisms and message-passing techniques like gRPC, REST, etc.

To keep the data consistent between the nodes, including keeping the nodes in sync, we need to understand consensus algorithms like Raft and Paxos, as well as the CAP theorem, in addition to understanding the data consistency models and distributed transactions.
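
As a small illustration of the majority idea that consensus algorithms like Raft and Paxos build on: a decision is accepted once more than half of the nodes agree, which is why clusters are usually sized with an odd number of nodes. The sketch below is only the arithmetic, not a consensus implementation, and the function names are hypothetical.

```python
def majority(cluster_size: int) -> int:
    """Smallest number of nodes that forms a majority quorum."""
    return cluster_size // 2 + 1


def tolerated_failures(cluster_size: int) -> int:
    """Nodes that can be down while a majority is still reachable."""
    return cluster_size - majority(cluster_size)
```

Note that going from 3 to 4 nodes raises the quorum size without raising the number of failures the cluster can survive.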

To manage distributed transactions in a scalable and resilient way, distributed services leverage techniques such as distributed logging, event sourcing, state machines, distributed cluster caching, and such.

To implement high availability, we need to be aware of concepts like redundancy, replication, retries, and failover. For scalability, several techniques are leveraged, such as sharding (partitioning), caching, dedicated load balancers, and so on.
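
To make retries and failover a little more concrete, here is a minimal sketch in Python. It assumes each replica is simply a callable that either returns a response or raises `ConnectionError`; the name `call_with_retries` and its parameters are hypothetical, not any library's API. It retries a replica with exponential backoff plus jitter, and fails over to the next replica once the attempts are used up.

```python
import random
import time


def call_with_retries(replicas, request, attempts_per_replica=3,
                      base_delay=0.05, sleep=time.sleep):
    """Try each replica in order; retry with backoff, then fail over."""
    last_error = None
    for replica in replicas:
        for attempt in range(attempts_per_replica):
            try:
                return replica(request)
            except ConnectionError as err:
                last_error = err
                # Exponential backoff: 1x, 2x, 4x the base delay, plus
                # random jitter so clients do not retry in lockstep.
                delay = base_delay * (2 ** attempt)
                sleep(delay + random.uniform(0, delay))
    raise RuntimeError("all replicas failed") from last_error
```

The `sleep` parameter is injected only so that the waiting can be swapped out in tests.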

These are foundational distributed systems concepts that are applicable when implementing a scalable, available, reliable, and resilient system.

In my crash course, I've started with the bare bones of what a cluster node is and continued to code a commit log running on a single node. More posts discussing the distributed scenarios are coming soon.
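
For a rough feel of what a single-node commit log involves, here is a minimal sketch in Python. It is not the implementation from the crash course; the class name `CommitLog` and the record layout (a 4-byte length prefix followed by the payload) are assumptions made for illustration, and it ignores concerns like torn writes, segments, and concurrent writers.

```python
import os
import struct


class CommitLog:
    """Append-only log: each record is a 4-byte big-endian length + payload."""

    def __init__(self, path: str) -> None:
        self.path = path
        self._positions = []  # byte position of each record, by record offset
        open(path, "ab").close()  # create the file if it does not exist
        self._rebuild_index()

    def _rebuild_index(self) -> None:
        # Scan the file once so existing records are readable after a restart.
        with open(self.path, "rb") as f:
            while True:
                pos = f.tell()
                header = f.read(4)
                if len(header) < 4:
                    break
                (size,) = struct.unpack(">I", header)
                f.seek(size, os.SEEK_CUR)
                self._positions.append(pos)

    def append(self, payload: bytes) -> int:
        """Write a record at the end of the log and return its offset."""
        pos = os.path.getsize(self.path)
        with open(self.path, "ab") as f:
            f.write(struct.pack(">I", len(payload)) + payload)
            f.flush()
            os.fsync(f.fileno())  # ask the OS to persist the record to disk
        self._positions.append(pos)
        return len(self._positions) - 1

    def read(self, offset: int) -> bytes:
        """Return the payload stored at the given record offset."""
        with open(self.path, "rb") as f:
            f.seek(self._positions[offset])
            (size,) = struct.unpack(">I", f.read(4))
            return f.read(size)
```

Records are never modified in place; consumers read by offset, which is the property that message brokers build on.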

To understand the TCP/IP protocol, and things like IP address, ports, and sockets, including how servers function, I've implemented a single-threaded and multi-threaded server in Java. Do check out the below posts:

Implementing a single-threaded blocking TCP/IP server

Enabling our server to handle concurrent requests by implementing a multi-threaded TCP/IP server
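
If you'd like a quick taste before reading those posts, a single-threaded blocking TCP server fits in a few lines. The sketch below uses Python rather than Java purely for brevity, and it is not the code from the posts; the function names `open_server` and `serve_one_client` are hypothetical. It accepts one client and echoes back whatever it receives.

```python
import socket


def open_server(host: str = "127.0.0.1", port: int = 0) -> socket.socket:
    """Create a listening TCP socket; port 0 lets the OS pick a free port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    server.bind((host, port))
    server.listen()
    return server


def serve_one_client(server: socket.socket) -> None:
    """Accept one connection and echo bytes back until the client closes."""
    conn, _addr = server.accept()   # blocks until a client connects
    with conn:
        while True:
            data = conn.recv(1024)  # blocks until data arrives
            if not data:            # empty bytes: the peer closed the connection
                break
            conn.sendall(data)
```

Because `accept` and `recv` block, this server handles exactly one client at a time. Handing each accepted connection to its own thread is the step that turns it into a multi-threaded server.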

System design and architecture

System design and architecture fundamentals go along with the distributed systems fundamentals. These concepts hover more around implementing the services in the application layer at the top of the compute stack we discussed before.

These are the web architecture and cloud fundamentals, including things like the client-server architecture, basics of message queues, databases, load balancing, microservices, different data consistency models, API design, communication protocols like REST and RPC, concurrent request processing, data modeling, and design patterns like publish-subscribe, event sourcing, saga, circuit breaker, leader-follower, CQRS, and so on.

Moreover, this system design and distributed systems fundamentals knowledge is crucial when preparing for our system design interviews.

If you wish to get a grip on the fundamentals starting from zero in a structured and easy-to-understand way, check out my zero to system architecture learning path, where you will learn the fundamentals of web architecture, cloud, and distributed systems in addition to learning how to design scalable services like YouTube, Netflix, ESPN and the like.

In addition, I've added several system design case studies to my newsletter. Do give those a read as well.

Systems programming language

Ideally, distributed systems are implemented in programming languages that provide low-level control over hardware resources such as memory, CPU, and I/O. This is key for writing performant systems.

Popular systems programming languages are C, C++, Rust, Go, Elixir, and Java (to some extent).

Distributed systems often require concurrency and parallelism support for multitasking. Go and Java provide robust concurrency constructs to handle concurrent scenarios effectively.

C, C++ and Rust fit best for writing ultra-low latency systems where memory efficiency and direct hardware access are critical, such as databases, caches, web servers, distributed storage, and so on.

Go has built-in support for concurrency. It also has a garbage collector and offers good performance. Systems such as Kubernetes, Docker, etcd and CockroachDB are written in Go.

Elixir is designed for writing highly concurrent, distributed systems with a focus on fault tolerance and lightweight processes.

Java offers built-in support for concurrency (supporting both I/O and CPU-intensive applications), provides networking APIs, and has a mature ecosystem. Java code can be deployed in any environment that runs a JVM.

Some of the distributed systems implemented in Java are Apache Hadoop, ZooKeeper, Elasticsearch, Apache Storm, Flink, Cassandra, HBase, and Apache Ignite.

Kafka is written in Scala and Java. Scala is a JVM-based language and is interoperable with Java, and Kafka's clients and many of its libraries and connectors are written in Java.

Though performant systems can be implemented in Java, as I mentioned before, when we need ultra-low latency in our systems, languages such as C, C++, and Rust are preferred. Java is more widely used to implement enterprise services than as a systems programming language.

However, if you just want to learn the underlying concepts for knowledge and not code systems to be used in production, you can code these systems in any programming language of your choice.

I've implemented the single-threaded and multi-threaded TCP/IP servers in Java and I am using Go to implement the message broker.

Systems and services can be implemented in any language as long as they fit the requirements. More importantly, understanding the underlying concepts is crucial.

Practice writing system software with CodeCrafters in the programming language of your choice

CodeCrafters is a platform that helps you build a solid understanding of writing system software. With their interactive, hands-on exercises, you learn to code systems like Git, Redis, Kafka, and more from the bare bones in the programming language of your choice.

You can follow my newsletter posts for detailed discussions on systems programming and architecture, and for hands-on practice, you can begin your learning with CodeCrafters to become a better engineer.

Check out their programming challenges and if you decide to make a purchase, you can use my unique link to get 40% off (affiliate).

Data structures and algorithms

Along with distributed systems fundamentals, we should be aware of the fundamentals of data structures, networking and OS.

Again, as I mentioned before, we need not know everything; we only need to know what is required as we move forward. I am listing the topics for reference.

Data structures and algorithms are crucial for building performant systems. When our systems get hit with traffic at the scale of millions of requests, the right data structures and algorithms enable them to process data and requests efficiently.

For instance, knowledge of B-trees and hash tables is crucial for implementing efficient data storage and retrieval systems. Merkle trees are leveraged for data synchronization in a distributed environment.
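
To see why Merkle trees help with synchronization, here is a minimal sketch in Python that computes only the root hash. Two replicas holding the same blocks get the same root, so they can compare a single hash instead of all their data. The function name `merkle_root`, the use of SHA-256, and the rule of duplicating the last hash on odd-sized levels are assumptions made for illustration; real systems differ in these details.

```python
import hashlib


def _sha256(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(blocks: list[bytes]) -> bytes:
    """Hash each block, then hash pairs upward until one root remains."""
    if not blocks:
        return _sha256(b"")
    level = [_sha256(block) for block in blocks]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])  # duplicate the last hash on odd levels
        level = [_sha256(level[i] + level[i + 1])
                 for i in range(0, len(level), 2)]
    return level[0]
```

When the roots differ, the replicas can walk down the tree and compare child hashes to find the blocks that actually diverged. That traversal is left out here.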

Bloom filters can help improve lookup times, reduce memory consumption, and optimize query performance in distributed databases and caches.
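
A Bloom filter is small enough to sketch in full. The version below is a minimal Python illustration, not a production implementation; the class name `BloomFilter`, the default sizes, and the trick of deriving several hash positions from SHA-256 with a seed prefix are all assumptions. It can answer "definitely not present" or "possibly present", never "definitely present".

```python
import hashlib


class BloomFilter:
    """Probabilistic set: no false negatives, occasional false positives."""

    def __init__(self, size_bits: int = 1024, num_hashes: int = 3) -> None:
        self.size = size_bits
        self.num_hashes = num_hashes
        self.bits = bytearray((size_bits + 7) // 8)

    def _positions(self, item: str):
        # Derive num_hashes bit positions by hashing the item with a seed.
        for seed in range(self.num_hashes):
            digest = hashlib.sha256(f"{seed}:{item}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.size

    def add(self, item: str) -> None:
        for pos in self._positions(item):
            self.bits[pos // 8] |= 1 << (pos % 8)

    def might_contain(self, item: str) -> bool:
        # If any bit is unset, the item was certainly never added.
        return all(self.bits[pos // 8] & (1 << (pos % 8))
                   for pos in self._positions(item))
```

A database can check such a filter before touching the disk: if `might_contain` returns `False`, the expensive lookup is skipped entirely.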

Algorithms, such as distributed consensus algorithms, help us manage varied levels of data consistency across the nodes. Consistent hashing is leveraged to distribute and replicate data efficiently across the cluster.
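
Here is a minimal consistent hashing sketch in Python, to show the idea rather than any particular system's implementation. The class name `HashRing`, the use of SHA-256, and the 100 virtual nodes per physical node are assumptions. Nodes and keys are hashed onto the same ring, and a key belongs to the first node found clockwise from the key's position.

```python
import bisect
import hashlib


class HashRing:
    """Maps keys to nodes; removing a node only remaps that node's keys."""

    def __init__(self, nodes, vnodes: int = 100) -> None:
        # Each node is placed on the ring many times (virtual nodes) so
        # that keys spread more evenly across the physical nodes.
        ring = [(self._hash(f"{node}#{i}"), node)
                for node in nodes for i in range(vnodes)]
        ring.sort()
        self._hashes = [h for h, _ in ring]
        self._nodes = [n for _, n in ring]

    @staticmethod
    def _hash(value: str) -> int:
        return int.from_bytes(hashlib.sha256(value.encode()).digest()[:8], "big")

    def node_for(self, key: str) -> str:
        # First ring position clockwise from the key, wrapping around.
        idx = bisect.bisect_right(self._hashes, self._hash(key))
        return self._nodes[idx % len(self._nodes)]
```

The payoff is that when a node leaves, only the keys it owned move elsewhere; with a plain `hash(key) % number_of_nodes` scheme, most keys would be reshuffled.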

Techniques like weighted routing and other dynamic routing algorithms help optimize resource utilization, system performance, and so on.
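
As one concrete example of weighted routing, the sketch below implements smooth weighted round-robin in Python. It is one of several possible schemes, and the class name and the server names in the usage note are hypothetical. Each server receives traffic in proportion to its weight, and the picks are interleaved rather than sent in bursts.

```python
class SmoothWeightedRoundRobin:
    """Picks servers in proportion to their weights, spread out evenly."""

    def __init__(self, weights: dict[str, int]) -> None:
        self.weights = dict(weights)
        self.current = {name: 0 for name in weights}
        self.total = sum(weights.values())

    def next(self) -> str:
        # Every server gains its weight; the highest score wins the pick
        # and then pays back the total weight.
        for name, weight in self.weights.items():
            self.current[name] += weight
        chosen = max(self.current, key=self.current.get)
        self.current[chosen] -= self.total
        return chosen
```

With weights `{"a": 5, "b": 1, "c": 1}`, seven consecutive calls to `next()` yield `a, a, b, a, c, a, a`: server `a` gets five of every seven requests, but never all five in a row.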

You get the idea. DSA is foundational in building optimized and performant systems.

Networking

Knowledge of low-level networking, including socket programming and how network communication happens in a distributed environment, is crucial in implementing distributed systems. Knowing network protocols such as TCP/IP, HTTP, UDP, etc., helps us understand how data is transmitted across machines/nodes in a network.

Distributed systems at a low level rely on protocols such as TCP/IP to reliably transmit data across a distributed environment. HTTP, as we all know, is the protocol the web runs on; it is the fundamental protocol for client-server communication.

Furthermore, we should understand the network topologies that enable us to create scalable software capable of functioning with optimized network latency and minimal communication overhead across different cloud regions and availability zones globally.

Operating systems

Understanding OS concepts such as memory management (virtual memory, paging, memory allocation), resource management, inter-process communication, and scheduling algorithms helps optimize memory usage and task execution in distributed systems.

Knowledge of processes, threads, locks, semaphores, monitors, OS user space, kernel space, etc., enables us to code efficient systems, ensuring optimum resource utilization. This subsequently augments our systems' throughput and responsiveness.
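
A tiny example of why locks matter: incrementing a shared counter is a read-modify-write sequence, so two threads can overwrite each other's updates unless the sequence is guarded. The sketch below is in Python and the names `SafeCounter` and `hammer` are hypothetical.

```python
import threading


class SafeCounter:
    """A counter that several threads can increment without losing updates."""

    def __init__(self) -> None:
        self._value = 0
        self._lock = threading.Lock()

    def increment(self) -> None:
        # Only one thread at a time may run the read-modify-write below.
        with self._lock:
            self._value += 1

    def value(self) -> int:
        with self._lock:
            return self._value


def hammer(counter: SafeCounter, workers: int = 8, increments: int = 10_000) -> int:
    """Increment the counter from several threads; return the final value."""
    def work() -> None:
        for _ in range(increments):
            counter.increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value()
```

With the lock in place, the final value always equals `workers * increments`. The cost is that threads queue up on the lock, which is the kind of trade-off between correctness and throughput that systems code constantly has to weigh.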

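For instance, Kafka's performance relies in part on the zero-copy optimization. Rather than reading log data into the application's user-space buffers and then writing it back out to the network, Kafka asks the operating system to transfer the data from the OS page cache straight to the network socket (on Linux, via the sendfile system call). This approach minimizes latency and maximizes throughput by avoiding redundant data copies and context switches between user space and kernel space. Note that Kafka does not bypass the file system cache; it leans on the page cache heavily for both reads and writes.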
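
To get a feel for zero-copy from application code, here is a minimal sketch in Python. It is not how Kafka itself is written (Kafka runs on the JVM); it only illustrates the same operating system facility. The function name `send_file_zero_copy` is hypothetical. Python's `socket.sendfile` uses `os.sendfile` where the platform supports it and falls back to ordinary `send` calls otherwise.

```python
import socket


def send_file_zero_copy(conn: socket.socket, path: str) -> int:
    """Send a whole file over a connected TCP socket; return bytes sent."""
    with open(path, "rb") as f:
        # Where os.sendfile is available, the kernel moves the file's bytes
        # to the socket without copying them into this process's memory.
        return conn.sendfile(f)
```

The conventional alternative is a loop of `f.read()` followed by `conn.sendall()`, which copies every chunk into user space and back into the kernel again.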

You'll find the specifics on this in my newsletter as I delve deeper into the message broker crash course. Stay tuned.

Furthermore, understanding file systems helps us code systems intended to handle large-scale data storage and retrieval, like Google Bigtable, Hadoop, etc.

I actively share new insights on the domain on my social media handles. Do follow me on LinkedIn and X to receive insights and updates in your home feed.

You can also subscribe to this newsletter to receive the content in your inbox as soon as it is published.

Deployment & Cloud

Finally, our software needs to be deployed in a distributed environment to test its functioning. This may require the use of technologies such as Docker, Kubernetes and possibly a cloud platform like AWS, GCP or Azure. This requires bare-bones knowledge of the cloud.

If you are hazy on the cloud fundamentals, my zero to system architecture learning path has got you covered. It contains a dedicated course that covers cloud fundamentals, including concepts like serverless, running complex workflows, vendor lock-in, cloud infrastructure, how large-scale services are deployed across different cloud regions and availability zones globally, deployment workflow and the associated infrastructure and technologies.

Now that we've discussed the list of topics we need to be aware of to implement a distributed system, you can proceed with the first post on the message broker crash course.

If you found this post insightful, do share it with your friends for more reach. You can find me on LinkedIn.

I'll see you in the next post.

Until then, Cheers!