System Design Case Study #4: How Shopify Implemented Read Consistency Across Their Database Replicas For A Consistent User Experience

Shopify leverages DB replication for redundancy and failure recovery, in addition to setting up read replicas as an alternative read-only data source for read operations. This reduces the read load on their primary database nodes as the read requests can be routed to the read replicas and the primary nodes can have more bandwidth to handle more write operations. This enhances the system's request throughput and overall performance.

Master nodes and the read replicas setup works great, but having a number of read replicas working along with the primary nodes introduces some replication lag in the system since syncing writes to all the read replicas requires some time.

As the replicas are being synched, requests fetching data from the read replicas might get inconsistent data since, at one point, all read replicas might not be strongly consistent.

Please go through my former newsletter posts for more context. I've delved deep into data consistency and data distribution across different cloud regions:

1. Distributing Our Database In Different Cloud Regions Globally To Manage Load & Latency.

2. Understanding Database Consistency Levels And Applying Them To A Single Web Service.

Since the distributed read replicas are not strongly consistent, in a scenario where data for a certain query is fetched from multiple replicas, assembled and returned to the users, the results of these queries fired by multiple clients will be highly unpredictable.

And what if, based on these results, the users perform further writes in the application? This will cause the system to be in a highly inconsistent state, leaving the end user confused and our support team with an inbox cluttered with customer whines.

To avert this scenario, Shopify needed a consistent system. They contemplated different consistency models, such as strong consistency, causal consistency, monotonic read consistency, etc., based on their use case.

Strong Consistency

Strong consistency suited best to have a strongly consistent system without the user seeing subtle variations in data. But it also meant synchronizing all the read replicas synchronously after a certain write was performed on the master node.

If the synchronization failed or had an issue, the write was unsuccessful. This consistency model wasn't the best choice since the synchronous updation of the replicas added additional latency to the write operations, undesirably negatively affecting the system's performance.

Causal Consistency

They next considered casual consistency, implementing it via GTID (Global Transaction Identifier). Every write on the primary server would have a unique GTID that would be replicated across the replicas. And based on the presence of the specific GTID, the system would ascertain the staleness of the data on a certain node by comparing it with the state on the primary node.

The issue with this approach was running additional logic on all the replicas that would stream data to a certain component of the system that would decide which DB node to route a certain query actively comparing the states of different read replicas and the master nodes. This was clearly additional overhead and the level of complexity was unnecessary.

Monotonic Read Consistency

They finally settled on monotonic read consistency based on their use case and with acceptable trade-offs. Monotonic consistency enabled the system to provide a consistent timeline of reads, though not in real-time.

The key to implementing this consistency was to route the client requests to the same DB node for the subsequent queries. When a certain client always hit the same DB node, they would always get consistent data wrt that node.

Initially, when the client sent a request to the database cluster, the system, with some considerations like latency, load balancing, etc, randomly sent the request to the DB nodes.

But now, since the requests had to be routed to the same node for the subsequent reads, the logic of routing requests had to be tweaked.

Adding A Unique Identifier To Every Client Request

To achieve this, Shopify added a UUID (Universally Unique Identifier) to every request. This ID is passed with every client request to enable the system to identify a series of requests from a certain client.

UUIDs are leveraged in distributed systems to uniquely identify information in a distributed computing environment. With UUIDs, we can ensure the information is globally unique, and it's highly unlikely that two UUIDs will be the same.

In distributed systems, they are used in multiple use cases, such as keys for distributed DB records, generating session IDs, security tokens, uniquely identifying messages, transactions, traces, and so on.

Shopify tagged the request UUID with every request and sent it within the query comments as a key-value pair:

/* consistent_read_id:<some unique ID> */ SELECT <fields> FROM <table>Why the UUID is passed in the query comments as opposed to being a part of the query is not explained in their engineering post. Maybe, based on their system, it would be easier to parse and extract the UUID from the comments or the comments could hold more metadata, such as user IDs, timestamps, etc., when required in the near future.

Hashing The UUID And Routing the Client Requests To The Same DB Node

To confirm that a client with requests with a certain UUID always hit the same DB node for read consistency, the UUID (consistent read ID for every client request) is hashed to get an integer value. A modulo operation is performed on this integer using the number of nodes as the divisor to get the DB node the request will hit.

The result of the modulo is the DB node index that the request with that specific UUID will hit. This is how Shopify ensured consistent reads across their database replicas.

Source: Shopify Engineering

Key System Design Learning From this Case Study

This post focuses on how Shopify contemplated different tradeoffs across different consistency levels, picked the monotonic read consistency as it fit their business requirements and eventually implemented it.

To ensure the clients' requests with unique IDs hit the same DB nodes for subsequent requests, they leveraged a technique called hash-based routing or hash-based load balancing, as I discussed above.

Hash-based Routing/Load Balancing

Hash-based routing ensures even distribution of incoming requests across a cluster of servers, in addition to routing data or requests to the same server nodes in a deterministic fashion. The process is the same as what Shopify implemented in their system, ensuring read consistency by routing client requests with unique IDs to the same servers.

It involves feeding a certain unique request attribute into the hash function to generate a unique hash, which is then mapped to a certain server by taking the module of the hash with the value of the total number of servers.

The hash function that is chosen is deterministic in nature and always produces the same hash value for the same request attribute, ensuring that the request always gets routed to the same server. In addition, the even distribution of requests across the cluster depends on the hash function as well.

Load balancers often leverage this technique to implement sticky sessions. Sticky sessions ensure that for a certain client session, all the requests are routed to the same server. The unique request attribute here is the session ID that gets routed to the same server holding the session state for that particular client, including the subsequent requests from the same client.

The Hash-based routing technique works well to route the requests to the same servers but has an issue if the servers get added or removed from the cluster dynamically. In this scenario, the system will route the request to a different server if a certain server that was supposed to receive a particular request goes down.

When the nodes get updated dynamically, the modulo operation has to recalculate the complete request mapping, creating a lot of confusion in the existing system state. And this happens continually as the servers get updated in real-time.

To tackle this, we leverage another hashing technique called consistent hashing.

Consistent Hashing

Consistent hashing is another hashing technique that distributes requests or data across a cluster of servers, minimizing the need for remapping when the number of servers changes dynamically. This provides more stability to request routing in a dynamic environment.

This is achieved by arranging the servers in a virtual ring called the hash ring. Servers are arranged or mapped on the hash ring by feeding their unique identifier (it can be their network address or another distinctive attribute) into a hash function.

The module of the generated hash value is taken with the total number of positions or slots in the ring to map it onto the hash ring. The position of each server/node on the ring is determined by its hash value. The process of calculating the server index via modulo is the same as the hashing-based routing approach. The difference is that in this scenario, both the nodes and the requests are hashed and mapped onto the ring.

The total number of positions or slots in the ring is based on the design considerations of our consistent hashing implementation. A higher number of positions ensures a fine-grained distribution, but this may have compute overhead during the lookup process. In contrast, if the number of positions is less, the computations will be less, but the load balancing might be uneven.

One common approach is to set the number of positions based on the number of server nodes available. So, for instance, if we have 30 servers in our system, we might have the total number of positions as a multiple of three times the number of servers; that would be 90 positions/slots.

It's more about experimentation and evaluation during implementation and seeing what fits best.

Similar to how the nodes are mapped onto the hash ring, the unique attribute of the client request is fed into the hash function to generate a hash. This hash value is then mapped onto the same hash ring using the same modulo approach.

When the client sends a request, the system maps the request to the server by finding the position on the ring where the hash value of the request falls and then moving clockwise and locating the nearest server in the ring.

So, as opposed to a request directly hitting a certain DB node based on the modulo output, like in hash-based routing, in consistent hashing, it's more about locating the hash location in the ring and finding the nearest servers in the ring. This decouples the requests and the DB nodes, making the system more dynamic.

When a new node is added or removed, the system adjusts the position of the updated node in the ring using the same hashing approach without the need to remap all the servers in the cluster. Most requests are still routed to the same nodes.

In real-world systems, the servers involved in consistent hashing are monitored in real-time to avoid any uneven distribution of load. If the issue exists, the ring is reconfigured for a more even distribution. Moreover, it is essential that the hash function that is chosen is deterministic and evenly distributes hash values across the ring.

The upside of this approach, in contrast to hash-based routing, is that it adapts to the cluster changes, which is critical in running real-world systems.

Hash-based routing that Shopify leveraged fits well in static environments where the number of servers is ideally static or doesn't change much often.

Consistent Hashing In Distributed Systems

Consistent hashing is extensively used in real-world distributed systems. Slack leverages it in its messaging architecture to route channel messages to different nodes in the cluster. Each channel has a unique ID, which is used as a key to hash to assign the channel to a unique node in the cluster. This ensures the messages for a given channel are handled by the same server, ensuring consistency and minimal network consumption.

I've done a case study on Slack's real-time messaging architecture on my blog here.

Consistent hashing is leveraged in several distributed systems use cases, such as balancing load across a cluster (we've already discussed this), data partitioning (data can be partitioned across multiple nodes in a distributed database based on consistent hashing), similarly cache data can be evenly distributed across the nodes of a distributed cache, same for CDN data distributed across the edge servers, task assignment in parallel processing and so on.

Here are a couple more real-world use cases: Spotify leverages consistent hashing to distribute the load of millions of users across their servers. Discord leverages it to scale to millions of users. In addition, distributed systems such as Couchbase, Riak, DynamoDB, Cassandra and many more leverage the technique to partition data across their distributed nodes.

If you wish to delve into the fundamentals of how distributed services work, how large-scale services manage their database growth, how they deal with global concurrent traffic and distributed data conflicts, including how they are deployed globally and much more, check out the Zero to Software Architecture Proficiency learning path.

It's a series of three courses authored by me intended to help you master the fundamentals and the intricacies of designing distributed systems like ESPN, Netflix, YouTube, and more.

Additionally, if you wish to learn to code distributed systems from the bare bones, I am running a series on it in this newsletter. Do check it out here.

If you wish to practice coding distributed systems like Redis, Docker, Git, a DNS server and more from the bare bones in the programming language of your choice, check out CodeCrafters (Affiliate). With their hands-on courses, you not only gain an in-depth understanding of distributed systems and advanced system design concepts but can also compare your project with the community and then finally navigate the official source code to see how it’s done.

You can use my unique link to get 40% off if you decide to make a purchase.

If you found this newsletter post helpful, consider sharing it with your friends for more reach. If you are reading the web version of this post, consider subscribing to get my posts delivered to your inbox as soon as they are published.

Implementing consistency via GTID (Global Transaction Identifier) is another interesting topic I'll be delving into in my future posts. It's been added to my list.

You’ll find the previous system design case study here:

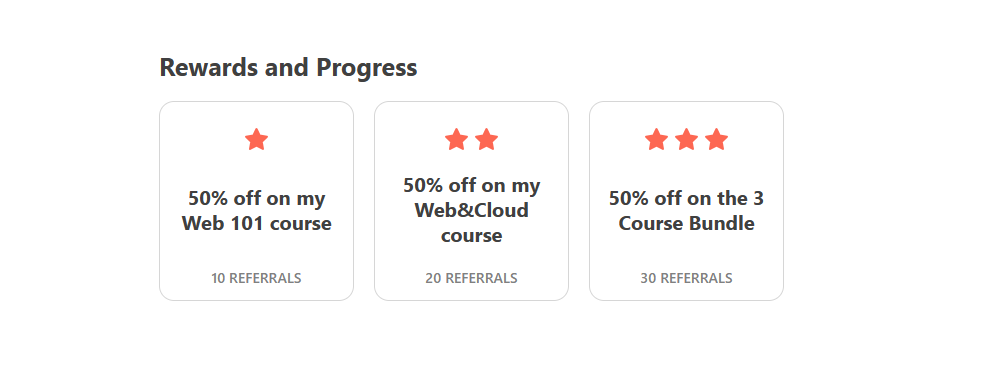

You can get a 50% discount on my courses by sharing my posts with your network. Based on referrals, you can unlock course discounts. Check out the leaderboard page for details.

You can find me on LinkedIn & X and can chat with me on Substack chat as well. I'll see you in the next post. Until then, Cheers!