System Design Case Study #3: Distributing Our Database In Different Cloud Regions Globally To Manage Load & Latency

Picture a scenario where we launch an online multiplayer card game based on a regional fictional character. The game enables players to trade cards, explore new and unique cards in the system, purchase them, participate in a battle royale mode, and so on.

Right after the launch, the game starts gaining traction in our country and the neighboring countries. Our service is initially deployed in a specific cloud region, for instance, the Asia Pacific, and is doing well from the latency standpoint.

Our MMO (Massively Multiplayer Online) Gaming Service Architecture

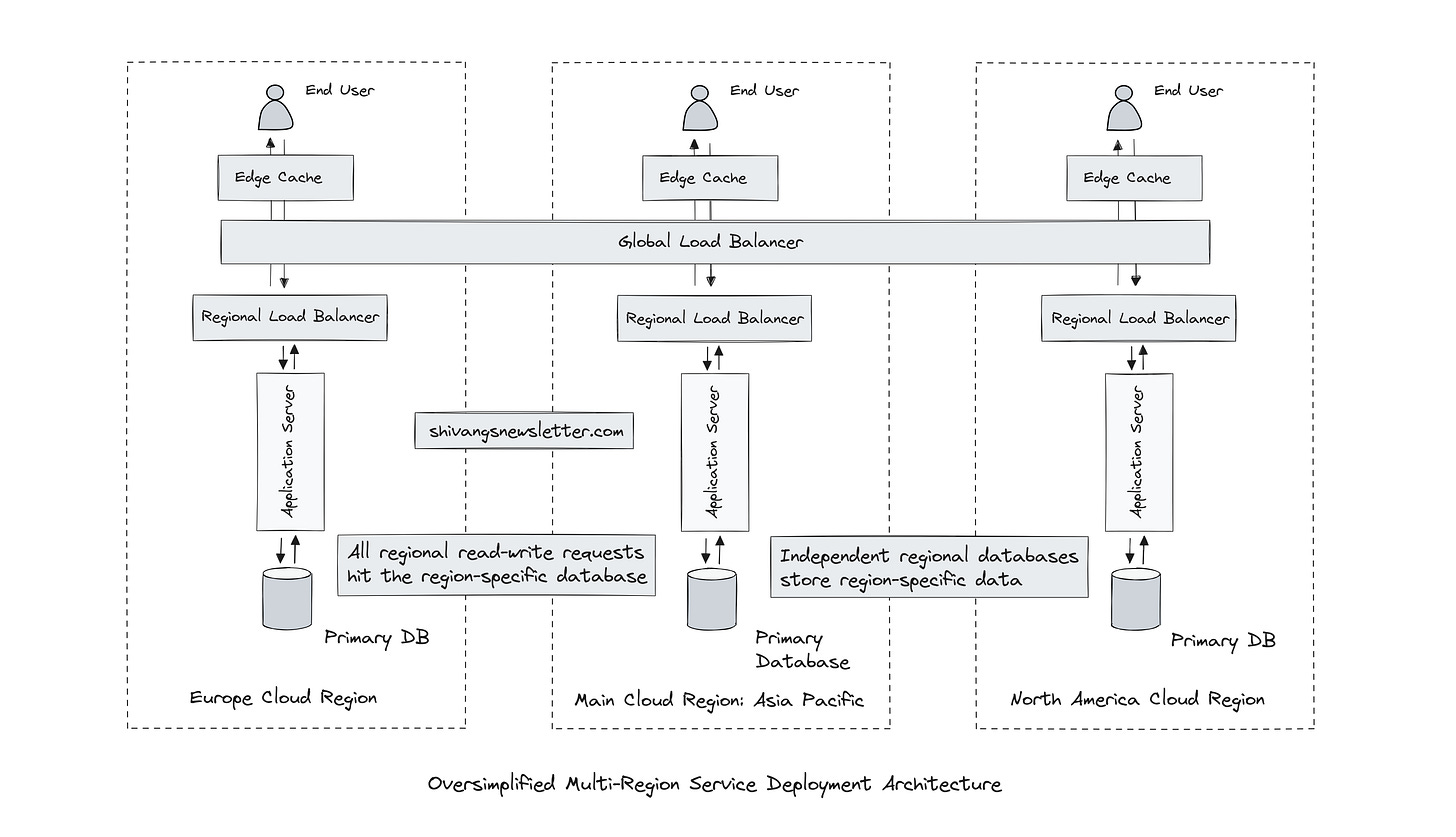

Here is the oversimplified architecture of our service:

We have a CDN at the edge for delivering static assets to the end users at blazing speed. The application server handles the game logic, player interactions, serving dynamic content, etc. And the database stores the game state and the player profile data.

There can be other components in our architecture as well, like the cloud object store for storing blob content, a search service for finding cards, an additional game server for matching players of the same skill, etc. But let's just keep things simple for now.

The aim of this post is to focus on different ways our database can be distributed in different cloud regions to expand our service globally as opposed to deeply delving into the architecture of an MMO game.

Over time, since our game is the best card game ever, it starts to gain global recognition and blows up beyond our expectations. Concurrent users from different cloud regions flock to our website.

Tackling this sudden traffic influx needs architectural and infrastructural changes. Our current infrastructure wasn't built to handle the global traffic. Due to excessive traffic, players in the main cloud region, along with those of the other cloud regions, naturally experience higher response latency.

Requests from other cloud regions incur additional network latency when they are routed to the main cloud region for reads and writes. Requests originating in the main cloud region slow down as well, because the infrastructure is cracking under traffic far beyond its capacity.

And this goes without saying: the spike in latency (being a crucial factor in our online game) deteriorates the in-game experience.

What do we do?

To deal with this, we distribute our architecture across several cloud regions to cut down the response latency and the load on the main cloud region.

Cross-Cloud Region Distributed Service Architecture

We will place the CDN nodes at edge locations in the respective cloud regions. The application servers will be deployed in every cloud region across one or multiple availability zones.

We will have a global load balancer and regional load balancers. The global load balancer routes traffic across different cloud regions. If a certain cloud region goes down, the global load balancer routes the traffic of that region to the nearest healthy cloud region. The regional load balancers route traffic across different availability zones and data centers within a region.
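
The failover behavior of the global load balancer can be sketched roughly as below. This is a minimal illustration, not how any particular cloud provider implements it; the region names and latency figures are hypothetical and only serve the example.

```python
# Hypothetical round-trip estimates in milliseconds between a client's
# region (outer key) and each cloud region (inner key). Made-up numbers.
LATENCY_MS = {
    "asia-pacific": {"asia-pacific": 20, "europe": 160, "us-east": 220},
    "europe": {"asia-pacific": 160, "europe": 20, "us-east": 90},
    "us-east": {"asia-pacific": 220, "europe": 90, "us-east": 20},
}


def pick_region(client_region, healthy):
    """Return the healthy region with the lowest estimated latency."""
    candidates = {
        region: ms
        for region, ms in LATENCY_MS[client_region].items()
        if region in healthy
    }
    if not candidates:
        raise RuntimeError("no healthy region available")
    return min(candidates, key=candidates.get)


# Normal operation: European players stay in Europe.
normal = pick_region("europe", {"asia-pacific", "europe", "us-east"})

# Europe is down: the same players are sent to the nearest healthy region.
failover = pick_region("europe", {"asia-pacific", "us-east"})
```

In this sketch, "nearest" simply means the lowest estimated latency among the regions that pass a health check.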

If you wish to understand how the request flows through the CDN and load balancers to application servers, check out the CDN and Load balancers (Understanding the request flow) post I've published on my blog.

What about the database? Do we have cloud-region-specific independent database deployments storing data only for that region? Should we shard our database, spreading out shards in different cloud regions? Or should we have read replicas in different cloud regions just for the reads?

Well, things are not so straightforward here. This largely depends on the business use case.

Distributing Our Database Across Different Cloud Regions

Read Replicas

Read replicas are copies of the primary database (in the main cloud region) deployed in different cloud regions.

With them, a user's read requests from a given cloud region don't hit the database in the main cloud region; instead, they are served by the read replica in that user's own cloud region.

This reduces read latency significantly, in addition to balancing database query load globally. Also, read replicas act as backups of the primary database. They function as a safeguard against data loss in case the primary cloud region faces a disaster.

All the read replicas are updated asynchronously as the primary database receives the application writes. So, we have to account for some replication lag: for a short time, a replica may return data older than what the primary holds.

The replicas can also be updated synchronously to make the system strongly consistent, but then every write is acknowledged only after the replicas confirm it, which adds cross-region latency to each write.
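
To make the lag concrete, here is a toy in-memory sketch of asynchronous replication. The `Primary` and `ReadReplica` classes are invented for illustration and don't correspond to any real database API; an actual replica streams changes continuously instead of waiting for an explicit `catch_up()` call.

```python
class Primary:
    """Toy primary database: applies writes and keeps an ordered change log."""

    def __init__(self):
        self.data = {}
        self.log = []  # ordered (key, value) writes

    def write(self, key, value):
        self.data[key] = value
        self.log.append((key, value))


class ReadReplica:
    """Toy replica that applies the primary's log some time after the write."""

    def __init__(self, primary):
        self.primary = primary
        self.data = {}
        self.applied = 0  # number of log entries already applied

    def read(self, key):
        return self.data.get(key)

    def lag(self):
        """Number of writes the replica has not seen yet."""
        return len(self.primary.log) - self.applied

    def catch_up(self):
        """Apply every log entry written since the last catch-up."""
        for key, value in self.primary.log[self.applied:]:
            self.data[key] = value
        self.applied = len(self.primary.log)


primary = Primary()
replica = ReadReplica(primary)

primary.write("player:42:coins", 100)
stale = replica.read("player:42:coins")  # replica has not caught up yet
replica.catch_up()
fresh = replica.read("player:42:coins")  # now matches the primary
```

The window between the write and `catch_up()` is the period in which a player reading from the regional replica sees old data.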

Should we use read replicas in our use case? We'll discuss this, but before that, let's understand the other architectural options.

Region-Specific Independent Distributed Databases

If the global workload is write-heavy as opposed to read-heavy, we would have to deploy cloud-region-specific independent databases to cut down on the write latency and load. This would prevent all the write queries from converging on the primary cloud region database.

Region-specific database deployments can also be scaled individually based on the regional load, thus adding flexibility to our system architecture.

Read replicas won't do much good in a write-heavy scenario since all the write queries would still have to travel to the primary cloud region.

There is one more thing: global services often have to comply with local government data laws that typically require them to keep region-specific data within that region. This requires us to have region-specific database deployments.

Region-specific independent database deployments sound good, but they bring along the additional complexity of synchronizing some writes across regions if we are maintaining a global game state (which we typically would).

For instance, if we are maintaining player rankings at a global level comprising different cloud regions, writes made in respective cloud regions need to be synchronized with the primary database in the main cloud region for some specific user events.

We have to account for the application's data consistency requirements when choosing such an architecture, because writes made concurrently in independent regional databases and synchronized afterwards are eventually consistent at the global level, as opposed to strongly consistent.
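
As a rough sketch of the rankings example, each regional database below records points locally and ships them to a global leaderboard in batches. The class names, player names, and the batch-sync approach are assumptions made for illustration; the article doesn't prescribe a specific mechanism.

```python
from collections import Counter


class RegionalDB:
    """Toy regional database: accepts writes locally with no cross-region hop."""

    def __init__(self, region):
        self.region = region
        self.pending = Counter()  # points earned locally, not yet synced

    def record_win(self, player, points):
        self.pending[player] += points

    def drain(self):
        """Hand over the unsynced points and start a fresh batch."""
        batch, self.pending = self.pending, Counter()
        return batch


class GlobalLeaderboard:
    """Toy global ranking held in the main cloud region."""

    def __init__(self):
        self.scores = Counter()

    def apply(self, batch):
        self.scores.update(batch)  # Counter.update adds the counts together

    def top(self, n):
        return self.scores.most_common(n)


apac = RegionalDB("asia-pacific")
europe = RegionalDB("europe")
board = GlobalLeaderboard()

apac.record_win("asha", 30)
europe.record_win("lena", 50)
apac.record_win("asha", 40)

before_sync = board.top(2)  # the global view lags behind the regions

for db in (europe, apac):  # arrival order does not matter: addition commutes
    board.apply(db.drain())

after_sync = board.top(2)
```

Until the batches arrive, the global ranking is stale, which is exactly the eventual consistency we have to design around.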

Synchronizing Data Across Global Nodes Of A Distributed Database

As discussed above, the actual complexity lies in synchronizing writes across several nodes of a distributed database deployed across the globe. This would involve critical factors such as latency requirements, system availability, network issues, data consistency requirements, and so on.

Different distributed databases, and distributed systems in general, use different techniques to sync data: quorum-based reads and writes, consensus algorithms such as Raft and Paxos, data versioning with vector clocks, and so on.
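
Two of these ideas are small enough to sketch. The first function checks the usual quorum condition: with `n` replicas, a read that contacts `r` of them is guaranteed to overlap with a write acknowledged by `w` of them when `r + w > n`. The second compares two vector clocks to tell whether one version happened before the other or whether they are concurrent (a conflict to resolve). The function names and the region keys are illustrative only.

```python
def quorum_overlaps(n, w, r):
    """True if every read quorum of size r intersects every write quorum of size w."""
    return r + w > n


def compare(a, b):
    """Compare two vector clocks given as {node: counter} dicts.

    Returns "equal", "before" (a happened before b), "after", or "concurrent".
    """
    nodes = set(a) | set(b)
    a_le_b = all(a.get(node, 0) <= b.get(node, 0) for node in nodes)
    b_le_a = all(b.get(node, 0) <= a.get(node, 0) for node in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"
    if b_le_a:
        return "after"
    return "concurrent"


# 3 replicas, writes wait for 2 acks, reads ask 2 replicas: quorums overlap.
strong = quorum_overlaps(n=3, w=2, r=2)

# Writes and reads touch a single replica each: a read may miss the write.
weak = quorum_overlaps(n=3, w=1, r=1)

# The second version has seen everything the first one has, plus one more event.
ordered = compare({"apac": 2, "eu": 1}, {"apac": 2, "eu": 2})

# Each region updated the record without seeing the other's update.
conflict = compare({"apac": 3}, {"eu": 1})
```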

This topic deserves a dedicated article; I have added this to my list and will be delving into it in my future posts.

Hybrid Distributed Database Architecture With Read Replicas & Region-Specific Independent Active-Active Deployments

Running distributed services is complex. We know that. Any number of use cases can warrant a hybrid architecture as opposed to sticking with one specific architecture or deployment strategy.

In a scenario where certain features of our game are unavailable in certain cloud regions for legal reasons and only read queries originate from those regions, just having read replicas could work there. This will reduce the data sync complexities in our architecture to a certain extent.

A few other regions could require data to be kept locally, which means setting up a dedicated regional database for each of them. In this scenario, we need a hybrid architecture, a mix of the two that optimizes resource usage.
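
A hybrid setup like this can be expressed as a per-region routing table. The sketch below is only an illustration; the region names, the `DEPLOYMENT` mapping, and the rule that unknown regions fall back to the primary are all assumptions.

```python
PRIMARY_REGION = "asia-pacific"

# Hypothetical deployment plan: what kind of database each region hosts.
DEPLOYMENT = {
    "asia-pacific": "primary",
    "europe": "regional_db",    # data must stay in the region
    "us-east": "read_replica",  # only read queries are served locally
}


def target_region(client_region, is_write):
    """Return the region whose database should serve this query."""
    mode = DEPLOYMENT.get(client_region)
    if mode in ("primary", "regional_db"):
        return client_region  # reads and writes are both handled locally
    if mode == "read_replica" and not is_write:
        return client_region  # reads are served by the local replica
    return PRIMARY_REGION  # writes from replica-only or unknown regions


local_read = target_region("us-east", is_write=False)
remote_write = target_region("us-east", is_write=True)
local_write = target_region("europe", is_write=True)
```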

Sharding Our Database Across Different Cloud Regions

Up to this point, we have discussed two ways to reduce hits on the primary database in the main cloud region. One is having region-specific read replicas and the other is having independent cloud-region-specific database deployments.

There is one more way to configure or distribute our database across the globe, which is by sharding it across different cloud regions.

This is different from independent deployments. Sharding involves breaking down a big database into smaller chunks called shards to reduce response times and make the data easier to manage.

In this setup, the shards are globally distributed across different cloud regions, making the architecture more scalable and flexible. Depending on our use case, the data can be sharded by geography, user attributes, user involvement in certain game features, or some other key.

In a sharded architecture, a shard in one cloud region can receive requests from other cloud regions, since the shard key, not the region a request originates from, decides where a piece of data lives.

Unlike an independent regional deployment, a shard does not act on its own for one cloud region or hold only that region's data; it is a logical part of a bigger database. Depending on the application requirements, the player data and game state of one cloud region may even be stored on a shard in another region, such as the primary cloud region.
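
Here is a minimal sketch of key-based shard routing, assuming one shard per region and a hash of the player ID as the shard key. The region list and the `shard_for` helper are hypothetical.

```python
import hashlib

# Hypothetical list of regions, each hosting one shard of the same database.
SHARD_REGIONS = ["asia-pacific", "europe", "us-east", "south-america"]


def shard_for(player_id):
    """Map a player ID to the region hosting its shard.

    A cryptographic hash is used so the mapping stays stable across
    processes (Python's built-in hash() is randomized for strings).
    """
    digest = hashlib.sha256(player_id.encode("utf-8")).digest()
    index = int.from_bytes(digest[:8], "big") % len(SHARD_REGIONS)
    return SHARD_REGIONS[index]


# A request for this player is routed to the same shard no matter which
# cloud region the request originates from.
home_shard = shard_for("player-1001")
```

Plain modulo hashing moves most keys when the number of shards changes, which is why production systems often prefer consistent hashing or a lookup table. Sharding by geography would swap the hash for a region attribute on the player record.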

Picking the Right Database Distribution Strategy

Whether to pick one of the three strategies (read replicas, cloud-region-specific independent database deployments, or sharding the database across cloud regions) or a hybrid architecture (a mix of two or all three) largely depends on our business requirements.

When designing our system, several factors need to be considered, such as traffic patterns, application consistency requirements, latency tolerance, operational costs and overhead, local data compliance, etc.

If most global traffic is read-heavy and write scenarios are not so latency-sensitive, read replicas could be a good fit.

To comply with local data laws, we may have to move our data from the main cloud region to respective cloud regions and availability zones. In this scenario, independent cloud-region-specific deployments will come into play.

If the use case is latency sensitive, we have no option other than to move the data close to the users either via regional sharding or independent deployments.

Speaking of low-latency data access, managed serverless databases have been picking up in developer circles of late. They manage the infrastructure, including connection pooling, which is a crucial element when handling large concurrent traffic. They offer ready-made data access APIs and are a good fit for thick client services. I'll be discussing them in my future posts. Stay tuned.

Understanding Trade-offs In System Architecture

To better understand how to pick the right database distribution strategy, let's quickly go over a scenario from our card gaming service, deployed in, say, four cloud regions. Our game's battle royale mode involves players battling in real time. This demands super-low-latency responses to user events to keep the engagement and in-game experience high.

We can set up region-specific databases to ensure low latency. But at the same time, we also have to deploy a feature that provides strongly consistent global user rankings in real time as the players complete in-game events, to keep them motivated and playing.

This would require our globally deployed system to be strongly consistent. This means confining writes to a few cloud regions or availability zones, so that as little data as possible is eventually consistent.

Now, this is a trade-off between maintaining strong system consistency and ensuring low system latency and availability.

P.S. We are not considering adherence to local data laws in this scenario.

We need to spread writes out globally to ensure super-low latency, but at the same time, we have to confine writes to a few regions or a single region to keep the data strongly consistent. This is a trade-off. Designing this system architecture requires careful thinking.
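
A back-of-the-envelope model shows why the two goals pull apart. It assumes a strongly consistent write must wait for one acknowledgment round trip from every other region (sent in parallel), while an eventually consistent write returns after the local commit. The numbers are invented, and real systems (quorum-based ones, for example) may wait for fewer regions.

```python
def write_latency_ms(local_ms, rtt_to_other_regions_ms, synchronous):
    """Estimate the latency a player sees for a single write.

    local_ms: time to commit in the player's own region.
    rtt_to_other_regions_ms: round-trip times to the other regions.
    synchronous: if True, wait for every other region to acknowledge.
    """
    if not synchronous:
        return local_ms  # other regions are updated in the background
    # Acknowledgments are gathered in parallel, so the slowest one dominates.
    return local_ms + max(rtt_to_other_regions_ms, default=0)


# Four regions: the player's own plus three others (hypothetical RTTs).
other_regions = [90, 160, 220]

eventual = write_latency_ms(5, other_regions, synchronous=False)
strong = write_latency_ms(5, other_regions, synchronous=True)
```

Under these assumptions, the strongly consistent write is dozens of times slower than the local one, which is hard to hide in a real-time battle.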

So, you see, most architectural decisions are a trade-off. There is no one-size-fits-all. No silver bullet. I want you to tell me in the comments how you would design such a system :)

I'll see you in the next post. Until then, Cheers!