Kafka tiered-storage at Uber and using it as a system of record

Kafka is heavily used in Uber's tech stack, serving several critical use cases, including batch and real-time systems.

The total storage in a Kafka cluster depends on factors such as the number of topic partitions, throughput and retention configuration. To scale cluster storage, more nodes need to be added, and each node brings additional CPU and memory that may not actually be required.
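To make the coupling concrete, here is a rough back-of-the-envelope sketch. The replication factor, the write rate, the retention period and the per-broker disk size are all assumed numbers for illustration, not figures from Uber.

```python
import math


def required_storage_bytes(write_bytes_per_sec, retention_seconds, replication_factor=3):
    """Approximate bytes the cluster holds at steady state.

    replication_factor=3 is an assumed default, not a figure from the post.
    """
    return write_bytes_per_sec * retention_seconds * replication_factor


def brokers_needed(total_bytes, usable_disk_bytes_per_broker):
    """Brokers required purely to fit the data on local disks."""
    return math.ceil(total_bytes / usable_disk_bytes_per_broker)


TB = 10**12
SEVEN_DAYS = 7 * 24 * 3600

# Hypothetical workload: 100 MB/s of writes retained for 7 days.
total = required_storage_bytes(100 * 10**6, SEVEN_DAYS)
brokers = brokers_needed(total, 10 * TB)  # assumed 10 TB usable disk per broker

print(f"{total / TB:.2f} TB across at least {brokers} brokers")
```

With these assumed inputs the cluster needs about 181.44 TB and at least 19 brokers, even if the CPU and memory of far fewer brokers would handle the traffic. Doubling retention doubles the broker count, which is the tight coupling described above.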

In this scenario, the storage and compute are tightly coupled, which causes issues with scalability, flexibility and deployability. Furthermore, storing data in local cluster nodes is expensive and increases cluster complexity, in contrast to storing data in remote storage, such as a cloud object store.

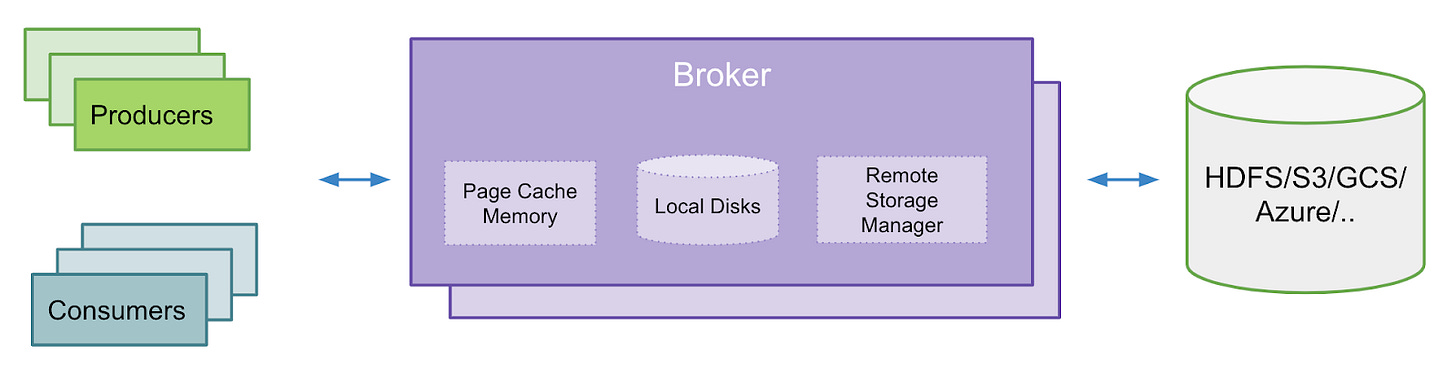

To tackle this, Uber Engineering proposed Kafka tiered storage, where the Kafka broker has two tiers of storage, local and remote, each with its own data retention policy.

The local tier is the storage on the broker nodes themselves, while the remote tier is the extended storage with a significantly longer retention period than the local tier.
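The two retention policies can be pictured with a small sketch. This is a simplified model, not the broker's actual logic: the function name and the retention values are hypothetical, and it assumes a log segment is served from exactly one tier at a time, whereas a real broker may briefly hold a segment in both.

```python
HOUR_MS = 60 * 60 * 1000
DAY_MS = 24 * HOUR_MS


def segment_tier(age_ms, local_retention_ms, total_retention_ms):
    """Return which tier would serve a segment of the given age (simplified)."""
    if age_ms >= total_retention_ms:
        return "expired"  # past the overall retention, deleted everywhere
    if age_ms < local_retention_ms:
        return "local"    # still within the short local retention window
    return "remote"       # aged out of local disks, kept in the remote tier


# Assumed policy: keep 6 hours locally and 30 days overall.
LOCAL_RETENTION = 6 * HOUR_MS
TOTAL_RETENTION = 30 * DAY_MS

for age in (1 * HOUR_MS, 2 * DAY_MS, 45 * DAY_MS):
    print(age // HOUR_MS, "hours old ->", segment_tier(age, LOCAL_RETENTION, TOTAL_RETENTION))
```

Under this assumed policy, only the most recent six hours of data occupy broker disks, while everything older lives in the cheaper remote tier until the overall retention expires.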

With Kafka natively supporting extended storage, storage and compute are decoupled, the load on local storage decreases, and systems can store data for a significantly longer duration with less complexity and optimized costs.

Separating storage and compute is a common practice in modern web architecture. I'll be delving into this in my future posts. For a detailed read on Kafka's tiered-storage architecture at Uber, check out this Uber engineering blog post.

With persistent storage natively available, and compute and storage decoupled by the tiered storage architecture, can we use Kafka as a system of record or a database?

Kafka is a distributed append-only immutable log. The immutable log is helpful in use cases where the history of events needs to be retained and replayed with high throughput.
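As a toy illustration of the append-only idea, here is a minimal in-memory sketch. It is not Kafka's implementation: the class and method names are made up for this example, and there is no distribution, partitioning or persistence.

```python
class AppendOnlyLog:
    """A toy single-partition log: records are only ever appended, never changed."""

    def __init__(self):
        self._records = []

    def append(self, record):
        """Store a record at the end of the log and return its offset."""
        self._records.append(record)
        return len(self._records) - 1

    def read_from(self, offset=0):
        """Replay every record from the given offset, in the order it was written."""
        return tuple(self._records[offset:])


log = AppendOnlyLog()
log.append({"ride_id": 1, "status": "requested"})
log.append({"ride_id": 1, "status": "accepted"})
log.append({"ride_id": 1, "status": "completed"})

# A new consumer can replay the full history from offset 0.
for record in log.read_from(0):
    print(record)
```

Because nothing is overwritten, any consumer can start at an earlier offset and rebuild the complete history of events.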

A database provides database-specific features for storing application state. Kafka evidently cannot fit use cases that require a dedicated database. However, if we already use Kafka in our architecture and it serves our persistence requirements well, we may use it as a system of record and, for simplicity, avoid plugging in a dedicated database.

KOR Financial, a financial services startup, leveraged this approach using Kafka as the system of record instead of relying on relational databases to store data.

Their data streaming architecture, powered by Kafka, captures events in addition to the system state, handling hundreds of petabytes of data cost-effectively. They leverage Confluent Cloud as an extended storage platform to store data for as long as they want.

Using Kafka as a database

Furthermore, Confluent has an excellent article on whether Kafka can be used as a database, and it's a recommended read.

They discuss a CRUD service that sends every CRUD event to a Kafka producer, which in turn updates the database and publishes the event to a Kafka Customer topic.

During update and delete operations, the database holds only the final state. Kafka behaves differently: update and delete events are appended to the log as new records in sequence, rather than a single copy of the data being updated or deleted in place as in a database.
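The difference between the two can be sketched in a few lines. The event shape (operation, key, value) and the customer data are hypothetical, chosen only to show how a database-like final state can be derived by replaying the log in order.

```python
def materialize(events):
    """Fold an ordered list of (operation, key, value) events into a final state."""
    state = {}
    for operation, key, value in events:
        if operation == "delete":
            state.pop(key, None)  # the row disappears from the final state
        else:
            state[key] = value    # create and update both set the latest value
    return state


# The log keeps every change as a separate record, in order.
customer_events = [
    ("create", "c1", {"name": "Ada", "city": "London"}),
    ("create", "c2", {"name": "Linus", "city": "Helsinki"}),
    ("update", "c1", {"name": "Ada", "city": "Paris"}),
    ("delete", "c2", None),
]

print(len(customer_events), "records in the log")
print(materialize(customer_events))  # only the final state, like a database table
```

The log holds all four records, while the materialized view contains only customer c1 with the updated city. The history stays available in the log for replay, whereas a database alone would have discarded it.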

The article further discusses the use of ksqlDB (built on top of Kafka) as an alternative to the database in the system architecture. Do give it a read.

This post touched upon how businesses are leveraging Kafka's data storage features and how those compare with a traditional database. If you found this post insightful, do share the web link with your network for more reach. You can connect with me on LinkedIn & X in addition to replying to this post.

I'll see you around in my next post. Until then, Cheers!
